Medical devices are incredibly vulnerable to hacking attacks. In some cases it’s because of software defects that allow for exploits, like buffer overflows, SQL injection or insecure direct object references. In other cases, you can blame misconfigurations, lack of encryption (or weak encryption), non-secure data/control networks, unfettered wireless access, and worse.

Why would hackers go after medical devices? Lots of reasons. To name but one: It’s a potential terrorist threat against real human beings. Remember that Dick Cheney famously had the wireless capabilities of his implanted heart defibrillator disabled for fear of an assassination attack.

Certainly healthcare organizations are being targeted for everything from theft of medical records to ransomware. To quote the report “Hacking Healthcare IT in 2016,” from the Institute for Critical Infrastructure Technology (ICIT):

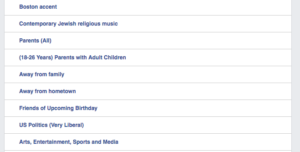

The Healthcare sector manages very sensitive and diverse data, which ranges from personal identifiable information (PII) to financial information. Data is increasingly stored digitally as electronic Protected Health Information (ePHI). Systems belonging to the Healthcare sector and the Federal Government have recently been targeted because they contain vast amounts of PII and financial data. Both sectors collect, store, and protect data concerning United States citizens and government employees. The government systems are considered more difficult to attack because the United States Government has been investing in cybersecurity for a (slightly) longer period. Healthcare systems attract more attackers because they contain a wider variety of information. An electronic health record (EHR) contains a patient’s personal identifiable information, their private health information, and their financial information.

EHR adoption has increased over the past few years under the Health Information Technology and Economics Clinical Health (HITECH) Act. Stan Wisseman [from Hewlett-Packard] comments, “EHRs enable greater access to patient records and facilitate sharing of information among providers, payers and patients themselves. However, with extensive access, more centralized data storage, and confidential information sent over networks, there is an increased risk of privacy breach through data leakage, theft, loss, or cyber-attack. A cautious approach to IT integration is warranted to ensure that patients’ sensitive information is protected.”

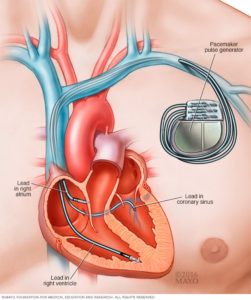

Let’s talk devices. Those could be everything from emergency-room monitors to pacemakers to insulin pumps to X-ray machines whose radiation settings might be changed or overridden by malware. The ICIT report says,

Mobile devices introduce new threat vectors to the organization. Employees and patients expand the attack surface by connecting smartphones, tablets, and computers to the network. Healthcare organizations can address the pervasiveness of mobile devices through an Acceptable Use policy and a Bring-Your-Own-Device policy. Acceptable Use policies govern what data can be accessed on what devices. BYOD policies benefit healthcare organizations by decreasing the cost of infrastructure and by increasing employee productivity. Mobile devices can be corrupted, lost, or stolen. The BYOD policy should address how the information security team will mitigate the risk of compromised devices. One solution is to install software to remotely wipe devices upon command or if they do not reconnect to the network after a fixed period. Another solution is to have mobile devices connect from a secured virtual private network to a virtual environment. The virtual machine should have data loss prevention software that restricts whether data can be accessed or transferred out of the environment.
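The "wipe if it does not reconnect" idea in that passage can be sketched in a few lines of Python. This is only an illustration: the `MobileDevice` record, the `devices_to_wipe` helper, and the 14-day threshold are hypothetical, and a real deployment would rely on whatever mobile device management product the organization already uses to issue the wipe.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class MobileDevice:
    """Hypothetical record for an enrolled BYOD device."""
    device_id: str
    owner: str
    last_seen: datetime  # last time the device checked in on the network


def devices_to_wipe(devices, now, max_absence=timedelta(days=14)):
    """Return devices that have not reconnected within max_absence.

    The 14-day default is an assumed policy value, not one taken from the report.
    """
    return [d for d in devices if now - d.last_seen > max_absence]


if __name__ == "__main__":
    now = datetime(2016, 3, 1)
    fleet = [
        MobileDevice("tab-001", "nurse station 4", datetime(2016, 2, 28)),
        MobileDevice("phone-117", "dr. example", datetime(2016, 2, 1)),
    ]
    for device in devices_to_wipe(fleet, now):
        # A real system would queue a remote-wipe command here.
        print(f"wipe candidate: {device.device_id} ({device.owner})")
```

The check is deliberately separate from the wipe itself, so the security team can review the candidate list before anything destructive happens.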

The Internet of Things, and the increased prevalence of medical devices connected to hospital or home networks, increases the risk. What can you do about it? The ICIT report says,

The best mitigation strategy to ensure trust in a network connected to the internet of things, and to mitigate future cyber events in general, begins with knowing what devices are connected to the network, why those devices are connected to the network, and how those devices are individually configured. Otherwise, attackers can conduct old and innovative attacks without the organization’s knowledge by compromising that one insecure system.

Given how common these devices are, keeping IT in the loop may seem impossible — but we must rise to the challenge, ICIT says:

If a cyber network is a castle, then every insecure device with a connection to the internet is a secret passage that the adversary can exploit to infiltrate the network. Security systems are reactive. They have to know about something before they can recognize it. Modern systems already have difficulty preventing intrusion by slight variations of known malware. Most commercial security solutions such as firewalls, IDS/IPS, and behavioral analytic systems function by monitoring where the attacker could attack the network and protecting those weakened points. The tools cannot protect systems that IT and the information security team are not aware exist.
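To make the "know what is connected, why, and how it is configured" advice concrete, here is a minimal Python sketch that compares the devices seen on a network against an approved inventory. Everything in it is an assumption for illustration: the `audit_network` function, the inventory fields `purpose` and `config_reviewed`, and the use of MAC addresses as identifiers. How the observed list is gathered (a network scan, switch logs, DHCP leases) is left out.

```python
def audit_network(inventory, observed_macs):
    """Compare observed devices with the approved inventory.

    inventory: dict mapping MAC address -> {"purpose": str, "config_reviewed": bool}
    observed_macs: iterable of MAC addresses currently seen on the network
    Returns three sorted lists of MAC addresses.
    """
    observed = {mac.lower() for mac in observed_macs}
    known = {mac.lower(): info for mac, info in inventory.items()}
    known_macs = set(known)

    return {
        # On the network, but nobody has recorded what or why it is.
        "unknown": sorted(observed - known_macs),
        # Recorded, but its configuration has never been reviewed.
        "unreviewed": sorted(
            mac for mac in observed & known_macs
            if not known[mac].get("config_reviewed", False)
        ),
        # Recorded, but not currently seen (possibly moved or retired).
        "missing": sorted(known_macs - observed),
    }


if __name__ == "__main__":
    inventory = {
        "00:1A:2B:00:00:01": {"purpose": "infusion pump, ward 3", "config_reviewed": True},
        "00:1A:2B:00:00:02": {"purpose": "bedside monitor, ER", "config_reviewed": False},
    }
    seen = ["00:1a:2b:00:00:02", "00:1a:2b:00:00:99"]
    print(audit_network(inventory, seen))
```

The "unknown" list is the one that matters most for the castle analogy: each entry is a passage nobody is guarding.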

The home environment – or any use outside the hospital setting – is another huge concern, says the report:

Remote monitoring devices could enable attackers to track the activity and health information of individuals over time. This possibility could impose a chilling effect on some patients. While the effect may lessen over time as remote monitoring technologies become normal, it could alter patient behavior enough to cause alarm and panic.

Pain medicine pumps and other devices that distribute controlled substances are likely high value targets to some attackers. If compromise of a system is as simple as downloading free malware to a USB and plugging the USB into the pump, then average drug addicts can exploit homecare and other vulnerable patients by fooling the monitors. One of the simpler mitigation strategies would be to combine remote monitoring technologies with sensors that aggregate activity data to match a profile of expected user activity.
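The report does not say how such a profile match would work. One very simple reading, sketched in Python below, is to count doses and total units in a sliding time window and flag anything beyond what the patient's prescription would predict. The `ExpectedProfile` fields, the `flag_unexpected_activity` function, and the sample numbers are all hypothetical; a real system would need clinically validated limits.

```python
from dataclasses import dataclass


@dataclass
class ExpectedProfile:
    """Hypothetical per-patient limits for a sliding time window."""
    window_hours: float
    max_doses: int
    max_units: float


def flag_unexpected_activity(events, profile):
    """Return timestamps at which recent dosing exceeds the expected profile.

    events: list of (hour_timestamp, units_delivered) tuples
    """
    alerts = []
    for t, _ in events:
        # Doses delivered in the window ending at (and including) time t.
        recent = [u for ts, u in events if t - profile.window_hours < ts <= t]
        if len(recent) > profile.max_doses or sum(recent) > profile.max_units:
            alerts.append(t)
    return alerts


if __name__ == "__main__":
    profile = ExpectedProfile(window_hours=4, max_doses=3, max_units=6.0)
    log = [(0, 1.0), (1, 1.0), (2, 1.0), (2.5, 1.0), (8, 1.0)]
    print(flag_unexpected_activity(log, profile))
```

In the sample log, the fourth dose within four hours is the one that gets flagged; the dose at hour 8 is back within the expected pattern.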

A major responsibility falls on the device makers, and on the programmers who create the embedded software. For the most part, they are simply not up to the challenge of designing secure devices, and may not have the policies, practices and tools in place to get cybersecurity right. Regrettably, the ICIT report doesn’t go into much detail about the embedded software, but does state,

Unlike cell phones and other trendy technologies, embedded devices require years of research and development; sadly, cybersecurity is a new concept to many healthcare manufacturers and it may be years before the next generation of embedded devices incorporates security into its architecture. In other sectors, if a vulnerability is discovered, then developers rush to create and issue a patch. In the healthcare and embedded device environment, this approach is infeasible. Developers must anticipate what the cyber landscape will look like years in advance if they hope to preempt attacks on their devices. This model is unattainable.

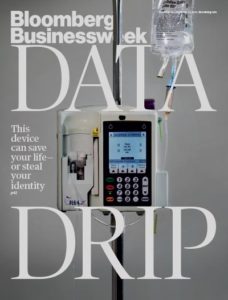

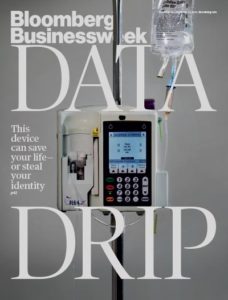

In November 2015, Bloomberg Businessweek published a chilling story, “It’s Way Too Easy to Hack the Hospital.” The authors, Monte Reel and Jordan Robertson, wrote about one hacker, Billy Rios:

Shortly after flying home from the Mayo gig, Rios ordered his first device—a Hospira Symbiq infusion pump. He wasn’t targeting that particular manufacturer or model to investigate; he simply happened to find one posted on EBay for about $100. It was an odd feeling, putting it in his online shopping cart. Was buying one of these without some sort of license even legal? he wondered. Is it OK to crack this open?

Infusion pumps can be found in almost every hospital room, usually affixed to a metal stand next to the patient’s bed, automatically delivering intravenous drips, injectable drugs, or other fluids into a patient’s bloodstream. Hospira, a company that was bought by Pfizer this year, is a leading manufacturer of the devices, with several different models on the market. On the company’s website, an article explains that “smart pumps” are designed to improve patient safety by automating intravenous drug delivery, which it says accounts for 56 percent of all medication errors.

Rios connected his pump to a computer network, just as a hospital would, and discovered it was possible to remotely take over the machine and “press” the buttons on the device’s touchscreen, as if someone were standing right in front of it. He found that he could set the machine to dump an entire vial of medication into a patient. A doctor or nurse standing in front of the machine might be able to spot such a manipulation and stop the infusion before the entire vial empties, but a hospital staff member keeping an eye on the pump from a centralized monitoring station wouldn’t notice a thing, he says.

The 97-page ICIT report makes some recommendations, which I heartily agree with.

- With each item connected to the internet of things there is a universe of vulnerabilities. Empirical evidence of aggressive penetration testing before and after a medical device is released to the public must be a manufacturer requirement.

- Ongoing training must be paramount in any responsible healthcare organization. Adversarial initiatives typically start with targeting staff via spear phishing and watering hole attacks. The act of an ill-prepared executive clicking on a malicious link can trigger a hurricane of immediate and long term negative impact on the organization and innocent individuals whose records were exfiltrated or manipulated by bad actors.

- A cybersecurity-centric culture must demand safer devices from manufacturers, privacy adherence by the healthcare sector as a whole and legislation that expedites the path to a more secure and technologically scalable future by policy makers.

This whole thing is scary. The healthcare industry needs to step up its game on cybersecurity.
