There are lots of reasons to use Git as your source-code management system. Whether used as a primary system, or in conjunction with an existing legacy repository, I’m going to argue that if you’re not using Git now, you should be at least testing it out.

Basics of Git: It is open source, and runs on Linux, Unix and Windows servers. It is stable. It is solid. It is fast. It is supported by just about every major tool vendor. Developers love Git. Managers love Git.

Not long ago, much of the world standardized on Concurrent Versions System (CVS) as its version control system. Then Subversion (SVN) came along, and the world standardized on that. Yes, yes, I know there are dozens of other version control systems, ranging from Microsoft’s Visual SourceSafe and Team Foundation Server to IBM Rational’s ClearCase. Those have always been niche products. Some are very successful niche products, but the industry standards have been CVS and SVN for years.

Along came Git, designed by Linus Torvalds in 2005, now headed up by Junio Hamano. For a brief history of Git, read “The Legacy of Linus Torvalds: Linux, Git, and One Giant Flamethrower,” by Robert McMillan, published in Wired in November 2012. For the official history, see the Git website.

What’s so wonderful about Git? I’ll answer in two ways: industry support and impressive functionality.

For industry support, let me refer you to two new articles by SD Times’ Lisa Morgan, which inspired this column. The first is “How to get Git into the enterprise,” and the other is “Git smart about tools: A Buyers Guide.” You’ll see that nearly every major industry player supports Git; even competing SCM systems have worked to ensure interoperability. That’s a heck of an endorsement, and shows the stability and maturity of the platform.

As for the impressive functionality, don’t take my word for it. Instead, let me quote from other bloggers.

Tobias Günther: “Work Offline: What if you want to work while you’re on the move? With a centralized VCS like Subversion or CVS, you’re stranded if you’re not connected to the central repository. With Git, almost everything is possible simply on your local machine: make a commit, browse your project’s complete history, merge or create branches… Git lets you decide where and when you want to work.”
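To make the offline point concrete, here is a minimal sketch (mine, not from Günther’s post) that commits, branches, merges and reads history in a throwaway repository without touching a network. It assumes a `git` executable is on the PATH; the file names, the `experiment` branch, and the helper names `git` and `offline_session` are invented for illustration.

```python
import subprocess
import tempfile
from pathlib import Path


def git(repo, *args):
    """Run one git command inside repo and return its trimmed stdout."""
    cmd = [
        "git",
        "-c", "user.name=Demo",               # hypothetical identity, so the
        "-c", "user.email=demo@example.com",  # sketch needs no global config
        "-c", "commit.gpgsign=false",
        *args,
    ]
    done = subprocess.run(cmd, cwd=repo, check=True,
                          capture_output=True, text=True)
    return done.stdout.strip()


def offline_session():
    """Commit, branch, merge and browse history using only the local disk."""
    with tempfile.TemporaryDirectory() as tmp:
        repo = Path(tmp)
        git(repo, "init", "--quiet")

        # Make a commit: no server involved.
        (repo / "notes.txt").write_text("first draft\n")
        git(repo, "add", "notes.txt")
        git(repo, "commit", "--quiet", "-m", "Add notes")
        main_branch = git(repo, "rev-parse", "--abbrev-ref", "HEAD")

        # Create a branch and commit on it.
        git(repo, "checkout", "--quiet", "-b", "experiment")
        (repo / "idea.txt").write_text("an idea\n")
        git(repo, "add", "idea.txt")
        git(repo, "commit", "--quiet", "-m", "Add idea")

        # Merge the branch back, then read the complete history.
        git(repo, "checkout", "--quiet", main_branch)
        git(repo, "merge", "--quiet", "--no-edit", "experiment")
        return git(repo, "log", "--format=%s").splitlines()


if __name__ == "__main__":
    for subject in offline_session():
        print(subject)
```

Every step runs against the `.git` directory on the local disk, which is why none of it needs a connection. Only sharing the work with others (push, fetch, pull) requires one.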

Stephen Ball: “Resolving conflicts is way easier (than SVN): In Git, if I have a private branch from a branch that has been updated with new (conflicting) commits, I can rebase its commits one at a time against the public destination branch. I can resolve conflicts as they arise between my code and the current codebase. This makes dealing with conflicts easy because I get the context of the conflict (my commit message) and only see one conflict at a time.

“In SVN if I merge a branch against another and there are a lot of conflicts, there’s nothing I can do but resolve them all at the same time. What a mess.”
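The one-conflict-at-a-time workflow Ball describes can be sketched roughly as follows. This is an illustration under stated assumptions, not his setup: it assumes `git` is on the PATH, and the branch name `private`, the file names, the hard-coded resolution text, and the helpers `git`, `commit_file` and `rebase_demo` are all made up. A real resolution would be edited by hand rather than hard-coded.

```python
import os
import subprocess
import tempfile
from pathlib import Path

# Accept replayed commit messages unedited, so no editor opens mid-rebase.
ENV = {**os.environ, "GIT_EDITOR": "true"}


def git(repo, *args, check=True):
    """Run one git command inside repo with a throwaway identity."""
    cmd = ["git", "-c", "user.name=Demo", "-c", "user.email=demo@example.com",
           "-c", "commit.gpgsign=false", *args]
    return subprocess.run(cmd, cwd=repo, env=ENV, check=check,
                          capture_output=True, text=True)


def commit_file(repo, name, text, message):
    """Write a file and commit it on the current branch."""
    (repo / name).write_text(text)
    git(repo, "add", name)
    git(repo, "commit", "--quiet", "-m", message)


def rebase_demo():
    """Rebase a private branch onto an updated public one, stop by stop."""
    with tempfile.TemporaryDirectory() as tmp:
        repo = Path(tmp)
        git(repo, "init", "--quiet")
        commit_file(repo, "app.txt", "timeout = 10\n", "Base")
        public = git(repo, "rev-parse", "--abbrev-ref", "HEAD").stdout.strip()

        # Private branch: one commit that will conflict, one that will not.
        git(repo, "checkout", "--quiet", "-b", "private")
        commit_file(repo, "app.txt", "timeout = 30\n", "Raise timeout")
        commit_file(repo, "docs.txt", "notes\n", "Add docs")

        # Meanwhile the public branch changes the same line.
        git(repo, "checkout", "--quiet", public)
        commit_file(repo, "app.txt", "timeout = 20\n", "Tune timeout")

        # Replay the private commits on top of the public branch.
        git(repo, "checkout", "--quiet", "private")
        stops = []
        result = git(repo, "rebase", public, check=False)
        while result.returncode != 0:
            conflicted = git(repo, "diff", "--name-only",
                             "--diff-filter=U").stdout.split()
            if not conflicted:
                raise RuntimeError(result.stderr)  # failed for another reason
            stops.append(conflicted)
            for name in conflicted:
                # Stand-in for a hand-edited resolution of this one commit.
                (repo / name).write_text("timeout = 30\n")
                git(repo, "add", name)
            result = git(repo, "rebase", "--continue", check=False)

        log = git(repo, "log", "--format=%s").stdout.splitlines()
        return stops, log
```

Each pass through the loop deals with the conflicts of exactly one replayed commit, which is the context Ball refers to. The second private commit touches a different file, so it replays without stopping.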

Scott Chacon: “There are tons of fantastic and powerful features in Git that help with debugging, complex diffing and merging, and more. There is also a great developer community to tap into and become a part of and a number of really good free resources online to help you learn and use Git…

“I want to share with you the concept that you can think about version control not as a necessary inconvenience that you need to put up with in order to collaborate, but rather as a powerful framework for managing your work separately in contexts, for being able to switch and merge between those contexts quickly and easily, for being able to make decisions late and craft your work without having to pre-plan everything all the time. Git makes all of these things easy and prioritizes them and should change the way you think about how to approach a problem in any of your projects and version control itself.”

Nicola Paolucci: “If you don’t like speed, being productive and more reliable coding practices, then you shouldn’t use Git.”

Peter Cho: “Most developers would be delighted if they can change their workflow to use Git. Switching over early would be more ideal unless, of course, your SCM relies on a large network of dependent applications. If it’s not viable to change SCM systems, I would highly recommend using it on future projects.

“Git is infamous for having a large suite of tools that even seasoned users need months to master. However, getting into the fundamentals of Git is simple if you’re trying to switch over from SVN or CVS. So give a try sometime.”

Thomas Koch: “Somebody probably already recommended you to switch to Git, because it’s the best VCS. I’d like to go a step further now and talk about the risk you’re taking if you won’t switch soon. By still using SVN (if you’re using CVS you’re doomed anyway), you communicate the following: We’re ignorant about the fact that the rest of the (free) world switched to Git. We don’t invest time to train our developers in new technologies. We don’t care to provide the best development infrastructure. We’re not used to collaborate with external contributors. We’re not aware how much Subversion sucks and that Subversion does not support any decent development process. Yes, our development process most certainly sucks too.”

Günther also wrote, “Go With the Flow: Only dead fish swim with the stream. And sometimes, clever developers do, too. Git is used by more and more well-known companies and Open Source projects: Ruby On Rails, jQuery, Perl, Debian, the Linux Kernel, and many more. A large community often is an advantage by itself because an ecosystem evolves around the system. Lots of tutorials, tools (do I have to mention Tower?) and services make Git even more attractive.”

I’m sure there are arguments against Git. Nearly all the ones I’ve heard have come to me via competing source-code management vendors, not from developers who have actually tried Git for at least one pilot. If you aren’t using Git, check it out. It’s the present and future of version control systems.

Chapter One: Christine Hall

Chapter One: Christine Hall

Open source software (OSS) offers many benefits for organizations large and small—not the least of which is the price tag, which is often zero. Zip. Nada. Free-as-in-beer. Beyond that compelling price tag, what you often get with OSS is a lack of a hidden agenda. You can see the project, you can see the source code, you can see the communications, you can see what’s going on in the support forums.

Open source software (OSS) offers many benefits for organizations large and small—not the least of which is the price tag, which is often zero. Zip. Nada. Free-as-in-beer. Beyond that compelling price tag, what you often get with OSS is a lack of a hidden agenda. You can see the project, you can see the source code, you can see the communications, you can see what’s going on in the support forums. Stupidity. Incompetence. Negligence. The unprecedented huge data breach at Equifax has dominated the news cycle, infuriating IT managers, security experts, legislators, and attorneys — and scaring consumers. It appears that sensitive personally identifiable information (PII) on 143 million Americans was exfiltrated, as well as PII on some non-US nationals.

Stupidity. Incompetence. Negligence. The unprecedented huge data breach at Equifax has dominated the news cycle, infuriating IT managers, security experts, legislators, and attorneys — and scaring consumers. It appears that sensitive personally identifiable information (PII) on 143 million Americans was exfiltrated, as well as PII on some non-US nationals. For programmers, a language style guide is essential for helping learn a language’s standards. A style guide also can resolve potential ambiguities in syntax and usage. Interestingly, though, the official

For programmers, a language style guide is essential for helping learn a language’s standards. A style guide also can resolve potential ambiguities in syntax and usage. Interestingly, though, the official

Be paranoid! When you visit a website for the first time, it can learn a lot about you. If you have cookies on your computer from one of the site’s partners, it can see what else you have been doing. And it can place cookies onto your computer so it can track your future activities.

Be paranoid! When you visit a website for the first time, it can learn a lot about you. If you have cookies on your computer from one of the site’s partners, it can see what else you have been doing. And it can place cookies onto your computer so it can track your future activities. Are you a coder? Architect? Database guru? Network engineer? Mobile developer? User-experience expert? If you have hands-on tech skills, get those hands dirty at a Hackathon.

Are you a coder? Architect? Database guru? Network engineer? Mobile developer? User-experience expert? If you have hands-on tech skills, get those hands dirty at a Hackathon. What’s it going to mean for Java? When Oracle purchased Sun Microsystems that was one of the biggest questions on the minds of many software developers, and indeed, the entire industry. In an April 2009 blog post, “

What’s it going to mean for Java? When Oracle purchased Sun Microsystems that was one of the biggest questions on the minds of many software developers, and indeed, the entire industry. In an April 2009 blog post, “ The newest issue of the second-best software development publication is out – and it’s a doozy. You’ll definitely want to read the July/August 2016 issue of

The newest issue of the second-best software development publication is out – and it’s a doozy. You’ll definitely want to read the July/August 2016 issue of  The MEF recently conducted its second LSO Hackathon at a Rome event called Euro16. You can read my story about it here in DiarioTi:

The MEF recently conducted its second LSO Hackathon at a Rome event called Euro16. You can read my story about it here in DiarioTi:  Today’s serendipitous discovery: A blog post about the Enterprise Software Development Conference (ESC), produced by

Today’s serendipitous discovery: A blog post about the Enterprise Software Development Conference (ESC), produced by  Fire up the

Fire up the  A hackathon – like the debut

A hackathon – like the debut  SEYTON

SEYTON I like this new Microsoft. Satya Nadella’s Microsoft. Yes, the CEO needs to improve his public speaking skills,

I like this new Microsoft. Satya Nadella’s Microsoft. Yes, the CEO needs to improve his public speaking skills,  Malicious agents can crash a website by implementing a DDoS—a Distributed Denial of Service Attack—against a server. So can sloppy programmers.

Malicious agents can crash a website by implementing a DDoS—a Distributed Denial of Service Attack—against a server. So can sloppy programmers. You’ve gotta read “

You’ve gotta read “ Where do your employees go to find shared data? If it’s external data, probably an external search engine, like Google (

Where do your employees go to find shared data? If it’s external data, probably an external search engine, like Google ( Microsoft has evolved considerably. It’s moved from its early days selling developer tools, or its era focusing on Windows and Office, or its run as a server software maker, or its first iteration as a cloud/online services company. Despite all the myriad changes, it’s always been true that Microsoft does not excel at innovation.

Microsoft has evolved considerably. It’s moved from its early days selling developer tools, or its era focusing on Windows and Office, or its run as a server software maker, or its first iteration as a cloud/online services company. Despite all the myriad changes, it’s always been true that Microsoft does not excel at innovation. If your developers aren’t enrolled in developer relations programs, they will grow old and stale. They will become moldy. They will pine for the Good Old Days and opine endlessly about the irrelevance of new tools, new platforms, new paradigms and new ideas. No matter their brilliance today, they will become obsolescent.

If your developers aren’t enrolled in developer relations programs, they will grow old and stale. They will become moldy. They will pine for the Good Old Days and opine endlessly about the irrelevance of new tools, new platforms, new paradigms and new ideas. No matter their brilliance today, they will become obsolescent. GOOGLE I/O 2004, SAN FRANCISCO — What is Android? It’s hard to know these days, and I’m not sure if that’s good or not. We all know what happened when Microsoft began seeing Windows as a common operating system for everything from embedded systems to desktops to phones to servers. By trying to be reasonably good at everything, Windows lost its way and ceased being the best platform for anything.

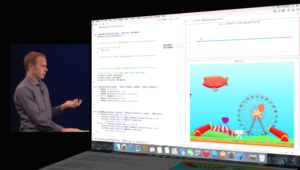

GOOGLE I/O 2004, SAN FRANCISCO — What is Android? It’s hard to know these days, and I’m not sure if that’s good or not. We all know what happened when Microsoft began seeing Windows as a common operating system for everything from embedded systems to desktops to phones to servers. By trying to be reasonably good at everything, Windows lost its way and ceased being the best platform for anything. SAN FRANCISCO — I expected a new version of OS X, the operating system for Mac desktops and notebooks. I expected a new version of iOS, the operating system for iPhones and iPads. I did not expect a new programming language. Yet that’s what we got at Apple’s

SAN FRANCISCO — I expected a new version of OS X, the operating system for Mac desktops and notebooks. I expected a new version of iOS, the operating system for iPhones and iPads. I did not expect a new programming language. Yet that’s what we got at Apple’s  There are lots of reasons to use

There are lots of reasons to use  South San Francisco, California — Writing software would be oh, so much simpler if we didn’t have all those darned choices. HTML5 or native apps? Windows Server in the data center or Windows Azure in the cloud? Which Linux distro? Java or C#? Continuous Integration? Continuous Delivery? Git or Subversion or both? NoSQL? Which APIs? Node.js? Follow-the-sun?

South San Francisco, California — Writing software would be oh, so much simpler if we didn’t have all those darned choices. HTML5 or native apps? Windows Server in the data center or Windows Azure in the cloud? Which Linux distro? Java or C#? Continuous Integration? Continuous Delivery? Git or Subversion or both? NoSQL? Which APIs? Node.js? Follow-the-sun? “I tried working for some tech companies like Microsoft, Tektronix, IBM, and Intel. What a fiasco. I can’t count how many young men with way less experience and skills than me snagged the good fun hands-on tech jobs, while I got stuck doing some kind of crap customer service job. I still remember this guy who got hired as a desktop technician. He was in his 30s, but in bad health, always red and sweaty and breathing hard. It took him forever to do the simplest task, like connecting a monitor or printer. He didn’t know much and was usually wrong, but he kept his job. I busted my butt to show I was serious and already had a good skill set, and would work my tail off to excel, and they couldn’t see past that I wasn’t male. So I got the message, mentally told them to eff off and stuck with freelancing.”

“I tried working for some tech companies like Microsoft, Tektronix, IBM, and Intel. What a fiasco. I can’t count how many young men with way less experience and skills than me snagged the good fun hands-on tech jobs, while I got stuck doing some kind of crap customer service job. I still remember this guy who got hired as a desktop technician. He was in his 30s, but in bad health, always red and sweaty and breathing hard. It took him forever to do the simplest task, like connecting a monitor or printer. He didn’t know much and was usually wrong, but he kept his job. I busted my butt to show I was serious and already had a good skill set, and would work my tail off to excel, and they couldn’t see past that I wasn’t male. So I got the message, mentally told them to eff off and stuck with freelancing.”