We had a good show this morning! Enjoy these photographs, taken with a Canon EOS 1D Mk IV with a 500mm prime lens. The first image was cropped, and the last one had its exposure boosted in post-processing by 4 stops. Otherwise, these are untouched.

Nobody wants bad guys to be able to hack connected cars. Equally importantly, they shouldn’t be able to hack any part of the multi-step communications path that leads from the connected car to the Internet to cloud services – and back again. Fortunately, companies are working across the automotive and security industries to make sure that doesn’t happen.

The consequences of cyberattacks against cars range from the bad to the horrific: Hackers might be able to determine that a driver is not home, and sell that information to robbers. Hackers could access accounts and passwords, and be able to leverage that information for identity theft, or steal information from bank accounts. Hackers might be able to immobilize vehicles, or modify/degrade the functionality of key safety features like brakes or steering. Hackers might even be able to seize control of the vehicle, and cause accidents or terrorist incidents.

Horrific. Thankfully, companies like semiconductor leader Micron Technology, along with communication security experts NetFoundry, have a plan – and are partnering with vehicle manufacturers to embed secure, trustworthy hardware into connected cars. The result: Safety. Security. Trust. Vroom.

The IoT consists of autonomous computing units, connected to back-end services via the Internet. Those back-end services are often in the cloud, and in the case of connected cars, might offer anything from navigation to infotainment to preventive maintenance to firmware upgrades for built-in automotive features. Often, the back-end services would be offered through the automobile’s manufacturer, though they may be provisioned through third-party providers.

The communications chain for connected cars is lengthy. On the car side, it begins with an embedded component (think stereo head unit, predictive front-facing radar used for adaptive cruise control, or anti-lock brake monitoring system). The component will likely contain or be connected to an ECU – an embedded control unit, a circuit board with a microprocessor, firmware, RAM, and a network connection. The ECU, in turn, is connected via an in-vehicle network, which connects to a communications gateway.

That communications gateway talks to a telecommunications provider, which could change as the vehicle crosses service provider or national boundaries. The telco links to the Internet, the Internet links to a cloud provider (such as Amazon Web Services), and from there, there are services that talk to the automotive systems.

Trust is required at all stages of the communications. The vehicle must be certain that its embedded devices, ECUs, and firmware are not corrupted or hacked. The gateway needs to know that it’s talking to the real car and its embedded systems – not fakes or duplicates offered by hackers. It also needs to know that the cloud services are the genuine article, and not fakes. And of course, the cloud services must be assured that they are talking to the real, authenticated automotive gateway and in-vehicle components.

Read more about this in my feature for Business Continuity, “Building Cybertrust into the Connected Car.”

A sad footnote to this blog post. Our faithful Prius, pictured above, was totaled in a collision. Nobody was injured, which is most important, but the car is gone. May it rust in peace.

We all have heard the usual bold predictions for technology in 2018: Lots of cloud computing, self-driving cars, digital cryptocurrencies, 200-inch flat-screen televisions, and versions of Amazon’s Alexa smart speaker everywhere on the planet. Those types of predictions, however, are low-hanging fruit. They’re not bold. One might as well predict that there will be some sunshine, some rainy days, a big cyber-bank heist, and at least one smartphone catching fire.

Let’s dig for insights beyond the blindingly obvious. I talked to several tech leaders, deep-thinking individuals in California’s Silicon Valley, asking them for their predictions, their idea of new trends, and disruptions in the tech industry. Let’s see what caught their eye.

Gary Singh, VP of marketing, OnDot Systems, believes that 2018 will be the year when mobile banking will transform into digital banking — which is more disruptive than one would expect. “The difference between digital and mobile banking is that mobile banking is informational. You get information about your accounts,” he said. Singh continues, “But in terms of digital banking, it’s really about actionable insights, about how do you basically use your funds in the most appropriate way to get the best value for your dollar or your pound in terms of how you want to use your monies. So that’s one big shift that we would see start to happen from mobile to digital.”

Tom Burns, Vice President and General Manager of Dell EMC Networking, has been following Software-Defined Wide Area Networks. SD-WAN is a technology that allows enterprise WANs to thrive over the public Internet, replacing expensive fixed-point connections provisioned by carriers using technologies like MPLS. “The traditional way of connecting branches in office buildings and providing services to those particular branches is going to change,” Burns observed. “If you look at the traditional router, a proprietary architecture, dedicated lines. SD-WAN is offering a much lower cost but same level of service opportunity for customers to have that data center interconnectivity or branch connectivity providing some of the services, maybe a full even office in the box, but security services, segmentation services, at a much lower cost basis.”

NetFoundry’s co-founder, Mike Hallett, sees a bright future for Application Specific Networks, which link applications directly to cloud or data center applications. The focus is on the application, not on the device. “For 2018, when you think of the enterprise and the way they have to be more agile, flexible and faster to move to markets, particularly going from what I would call channel marketing to, say, direct marketing, they are going to need application-specific networking technologies.” Hallett explains that Application Specific Networks offer the ability to be able to connect from an application, from a cloud, from a device, from a thing, to any application or other device or thing quickly and with agility. Indeed, those connections, which are created using software, not hardware, could be created “within minutes, not within the weeks or months it might take, to bring up a very specific private network, being able to do that. So the year of 2018 will see enterprises move towards software-only networking.”

Mansour Karam, CEO and founder of Apstra, also sees software taking over the network. “I really see massive software-driven automation as a major trend. We saw technologies like intent-based networking emerge in 2017, and in 2018, they’re going to go mainstream,” he said.

There are predictions around open networking, augmented reality, artificial intelligence – and more. See my full story in Upgrade Magazine, “From SD-WAN to automation to white-box switching: Five tech predictions for 2018.”

Tom Burns, VP and General Manager of Dell EMC Networking, doesn’t want 2018 to be like 2017. Frankly, none of us in tech want to hit the “repeat” button either. And we won’t, not with increased adoption of blockchain, machine learning/deep learning, security-as-a-service, software-defined everything, and critical enterprise traffic over the public Internet.

Of course, not all possible trends are positive ones. Everyone should prepare for more ransomware, more dangerous data breaches, newly discovered flaws in microprocessors and operating systems, lawsuits over GDPR, and political attacks on Net Neutrality. Yet, as the tech industry embraces 5G wireless and practical applications of the Internet of Things, let’s be optimistic, and hope that innovation outweighs the downsides of fast-moving technology.

Here, Dell has become a major force in networking across the globe. The company’s platform, known as Dell EMC Open Networking, includes a portfolio of data center switches and software, as well as solutions for campus and branch networks. Plus, Dell offers end-to-end services for digital transformation, training, and multivendor environment support.

Tom Burns heads up Dell’s networking business. That business became even larger in September 2016, when Dell closed on its US$67 billion acquisition of EMC Corp. Before joining Dell in 2012, Burns was a senior executive at Alcatel-Lucent for many years. He and I chatted in early January at one of Dell’s offices in Santa Clara, Calif.

Q: What’s the biggest tech trend from 2017 that you see continuing into 2018?

Tom Burns (TB): The trend that I think will continue into 2018 and even beyond is around digital transformation. And I recognize that everyone may have a different definition of what that means, but what we at Dell Technologies believe it means is that the number of connected devices is exploding, whether it be cell phones or RFIDs or intelligent types of devices that are looking at our factories and so forth.

And all of this information needs to be collected and analyzed, with what some call artificial intelligence. Some of it needs to be aggregated at the edge. Some of it’s going to be brought back to the core data centers. This is what we refer to as IT transformation, to enable workforce transformation and other capabilities to deliver the applications, the information, the video, the voice communications, in real time to the users and give them the intelligence from the information that’s being gathered to make real-time decisions or whatever they need the information for.

Q: What do you see as being the tech trend from 2017 that you hope won’t continue into 2018?

TB: The trend that won’t continue into 2018 is the old buying habits around previous-generation technology. CIOs and CEOs, whether in enterprises or in service providers, are going to have to think of a new way to deliver their services and applications on a real-time basis, and the traditional architectures that have driven our data centers over the years just is not going to work anymore. It’s not scalable. It’s not flexible. It doesn’t drive out the costs that are necessary in order to enable those new applications.

So one of the things that I think is going to stop in 2018 is the old way of thinking – proprietary applications, proprietary full stacks. I think disaggregation, open, is going to be adopted much, much faster.

Q: If you could name one thing that will predict how the tech industry will do business next year, what do you think it will be?

TB: Well, I think one of the major changes, and we’ve started to see it already, and in fact, Dell Technologies announced it about a year ago, is how is our technology being consumed? We’ve been, let’s face it, box sellers or even solution providers that look at it from a CapEx standpoint. We go in, talk to our customers, we help them enable a new application as a service, and we kind of walk away. We sell them the product, and then obviously we support the product.

More and more, I think the customers and the consumers are looking for different ways to consume that technology, so we’ve started things like consumption models like pay as you grow, pay as you turn on, consumption models that allows us to basically ignite new services on demand. We have some several customers that are doing this, particularly around the service provider area. So I think one way tech companies are going to change on how they deliver is this whole thing around pay as a service, consumption models and a new way to really provide the technology capabilities to our customers and then how do they enable them.

Q: If you could predict one thing that will change how enterprise customers do business next year…?

TB: One that we see as a huge, tremendous impact on how customers are going to operate is SD-WAN. The traditional way of connecting branches and office buildings and providing services to those particular branches is going to change. If you look at the traditional router, a proprietary architecture, dedicated lines, SD-WAN is offering a much lower cost but same level of service opportunity for customers to have that data center interconnectivity or branch connectivity, providing some of the services, maybe a full even office in the box, but security services, segmentation services, at a much lower cost basis. So I think that one of the major changes for enterprises next year and service providers is going to be this whole concept and idea with real technology behind it around Software-Defined WAN.

There’s a lot more to my conversation with Tom Burns. Read the entire interview at Upgrade Magazine.

From January 1, 2005 through December 27, 2017, the Identity Theft Resource Center (ITRC) reported 8,190 breaches, with 1,057,771,011 records exposed. That’s more than a billion records. Billion with a B. That’s not a problem. That’s an epidemic.

That horrendous number compiles data breaches in the United States confirmed by media sources or government agencies. Breaches may have exposed information that could potentially lead to identity theft, including Social Security numbers, financial account information, medical information, and even email addresses and passwords.

Of course, some people may be included in multiple breaches, and given today’s highly interconnected world, that’s very likely. There’s no good way to know how many individuals were affected.

What defines a breach? The organization says,

Security breaches can be broken down into a number of additional sub-categories by what happened and what information (data) was exposed. What they all have in common is they usually contain personal identifying information (PII) in a format easily read by thieves, in other words, not encrypted.

The ITRC tracks seven categories of breaches:

As we’ve seen, data loss has occurred when employees store data files on a cloud service without encryption, without passwords, without access controls. It’s like leaving a luxury car unlocked, windows down, keys on the seat: If someone sees this and steals the car, it’s theft – but it was easily preventable theft abetted by negligence.

The rate of breaches is increasing, says the ITRC. The number of U.S. data breach incidents tracked in 2017 hit a record high of 1,579 breaches exposing 178,955,069 records. This is a 44.7% increase over the record high figures reported for 2016, says the ITRC.

It’s mostly but not entirely about hacking. The ITRC says in its “2017 Annual Data Breach Year-End Review,”

Hacking continues to rank highest in the type of attack, at 59.4% of the breaches, an increase of 3.2 percent over 2016 figures: Of the 940 breaches attributed to hacking, 21.4% involved phishing and 12.4% involved ransomware/malware.

In addition,

Nearly 20% of breaches included credit and debit card information, a nearly 6% increase from last year. The actual number of records included in these breaches grew by a dramatic 88% over the figures we reported in 2016. Despite efforts from all stakeholders to lessen the value of compromised credit/debit credentials, this information continues to be attractive and lucrative to thieves and hackers.

Data theft truly is becoming epidemic. And it’s getting worse.

Hello, Terry, whatever your email address is. I don’t think you really have a job offer for me. For one thing, if you really connected via LinkedIn, you’d have messaged me through the service. For another, email addresses often match names. For another… well, that’s enough.

Don’t reply to messages like this. Just delete them.

From: Terry Kaneko [email hidden]

Subject: Position Detail

Date: January 24, 2018 at 4:04:18 PM MST

Reply-To: [email hidden]

Hello

How are you doing today is nice connect you on Linkedin i think you

are fit for a job offer and i will like to share with you.Regard

Terry Kaneko.

The pattern of cloud adoption moves something like the ketchup bottle effect: You tip the bottle and nothing comes out, so you shake the bottle and suddenly you have ketchup all over your plate.

That’s a great visual from Frank Munz, software architect and cloud evangelist at Munz & More, in Germany. Munz and a few other leaders in the Oracle community were interviewed on a podcast by Bob Rhubart, Architect Community Manager at Oracle, about the most important trends they saw in 2017. The responses covered a wide range of topics, from cloud to blockchain, from serverless to machine learning and deep learning.

During the 44-minute session, “What’s Hot? Tech Trends That Made a Real Difference in 2017,” the panel took some fascinating detours into the future of self-programming computers and the best uses of container technologies like Kubernetes. For those, you’ll need to listen to the podcast.

The panel included: Frank Munz; Lonneke Dikmans, chief product officer of eProseed, Netherlands; Lucas Jellema, CTO, AMIS Services, Netherlands; Pratik Patel, CTO, Triplingo, US; and Chris Richardson, founder and CEO, Eventuate, US. The program was recorded in San Francisco at Oracle OpenWorld and JavaOne.

The ketchup quip reflects the cloud passing a tipping point of adoption in 2017. “For the first time in 2017, I worked on projects where large, multinational companies give up their own data center and move 100% to the cloud,” Munz said. These workload shifts are far from a rarity. As Dikmans said, the cloud drove the biggest change and challenge: “[The cloud] changes how we interact with customers, and with software. It’s convenient at times, and difficult at others.”

Security offered another way of looking at this tipping point. “Until recently, organizations had the impression that in the cloud, things were less secure and less well managed, in general, than they could do themselves,” said Jellema. Now, “people have come to realize that they’re not particularly good at specific IT tasks, because it’s not their core business.” They see that cloud vendors, whose core business is managing that type of IT, can often do those tasks better.

In 2017, the idea of shifting workloads en masse to the cloud and decommissioning data centers became mainstream and far less controversial.

But wait, there’s more! Read about blockchain, serverless computing, and pay-as-you-go machine learning in my essay published in Forbes, “Tech Trends That Made A Real Difference In 2017.”

“The functional style of programming is very charming,” insists Venkat Subramaniam. “The code begins to read like the problem statement. We can relate to what the code is doing and we can quickly understand it.” Not only that, Subramaniam explains in his keynote address for Oracle Code Online, but as implemented in Java 8 and beyond, functional-style code is lazy—and that laziness makes for efficient operations because the runtime isn’t doing unnecessary work.

Subramaniam, president of Agile Developer and an instructional professor at the University of Houston, believes that laziness is the secret to success, both in life and in programming. Pretend that your boss tells you on January 10 that a certain hourlong task must be done before April 15. A go-getter might do that task by January 11.

That’s wrong, insists Subramaniam. Don’t complete that task until April 14. Why? Because the results of the boss’s task aren’t needed yet, and the requirements may change before the deadline, or the task might be canceled altogether. Or you might even leave the job on March 13. This same mindset should apply to your programming: “Efficiency often means not doing unnecessary work.”
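The same idea can be seen in a small Java stream pipeline. The sketch below is illustrative only and is not taken from Subramaniam’s keynote: the class name, method name, and sample numbers are hypothetical. It uses `peek` to count how many elements the pipeline actually looks at before `findFirst` has its answer.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;
import java.util.concurrent.atomic.AtomicInteger;

class LazyStreamDemo {
    // Counts how many elements the pipeline actually inspects (for illustration only).
    static final AtomicInteger inspected = new AtomicInteger();

    // Finds the first even number above the threshold and doubles it.
    static Optional<Integer> firstDoubledEvenAbove(List<Integer> numbers, int threshold) {
        return numbers.stream()
                .peek(n -> inspected.incrementAndGet()) // record each element pulled through
                .filter(n -> n > threshold)
                .filter(n -> n % 2 == 0)
                .map(n -> n * 2)
                .findFirst();                           // terminal operation; stops at the first match
    }

    public static void main(String[] args) {
        List<Integer> numbers = Arrays.asList(1, 2, 3, 5, 4, 6, 7, 8, 9, 10);
        Optional<Integer> result = firstDoubledEvenAbove(numbers, 3);
        System.out.println(result.orElse(-1) + " after inspecting " + inspected.get() + " elements");
    }
}
```

With this sample list, the pipeline stops as soon as it reaches 4, so only the first five of the ten elements are ever examined. Nothing runs at all until the terminal `findFirst` asks for a result, which is the “don’t do it until April 14” principle in code.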

Subramaniam received the JavaOne RockStar award three years in a row and was inducted into the Java Champions program in 2013 for his efforts in motivating and inspiring software developers around the world. In his Oracle Code Online keynote, he explored how functional-style programming is implemented in the latest versions of Java, and why he’s so enthusiastic about using this style for applications that process lots and lots of data—and where it’s important to create code that is easy to read, easy to modify, and easy to test.

The old mainstream of imperative programming, which has been a part of the Java language from day one, relies upon developers to explicitly code not only what they want the program to do, but also how to do it. Take software that has a huge amount of data to process; the programmer would normally create a loop that examines each piece of data, and if appropriate, take specific action on that data with each iteration of the loop. It’s up to the developer to create the loop and manage it—in addition to coding the business logic to be performed on the data.

The imperative model, argues Subramaniam, results in what he calls “accidental complexity”: each line of code might perform multiple functions, which makes it hard to understand, modify, test, and verify. And, the developer must do a lot of work to set up and manage the data and iterations. “You get bogged down with the details,” he said. “This not only introduces complexity, but makes code hard to change.”

By contrast, when using a functional style of programming, developers can focus almost entirely on what is to be done, while ignoring the how. The how is handled by the underlying library of functions, which are defined separately and applied to the data as required. Subramaniam says that functional-style programming provides highly expressive code, where each line of code does only one thing: “The code becomes easier to work with, and easier to write.”
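To make the contrast concrete, here is a minimal sketch of the same task written both ways. It is an illustration under assumed names (the class, the two methods, and the sample data are hypothetical), not code from the keynote. Both methods keep the names of at least a given length and convert them to uppercase.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

class StyleComparison {
    // Imperative style: the developer writes the loop and manages the result list.
    static List<String> longNamesImperative(List<String> names, int minLength) {
        List<String> result = new ArrayList<>();
        for (String name : names) {
            if (name.length() >= minLength) {
                result.add(name.toUpperCase());
            }
        }
        return result;
    }

    // Functional style: each line states one step; iteration is left to the library.
    static List<String> longNamesFunctional(List<String> names, int minLength) {
        return names.stream()
                .filter(name -> name.length() >= minLength)
                .map(String::toUpperCase)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> names = Arrays.asList("Ann", "Venkat", "Bob", "Lonneke");
        System.out.println(longNamesImperative(names, 5));
        System.out.println(longNamesFunctional(names, 5));
    }
}
```

Both versions return the same list. In the second, the filter, the transformation, and the collection step each sit on their own line, which is the “each line of code does only one thing” property described above.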

Subramaniam adds that in functional-style programming, “The code becomes the business logic.” Read more in my essay published in Forbes, “Lazy Java Code Makes Applications Elegant, Sophisticated — And Efficient at Runtime.”

At least, I think it’s Swedish! Just stumbled across this. I hope they bought the foreign rights to one of my articles…

With lots of inexpensive, abundant computation resources available, nearly anything becomes possible. For example, you can process a lot of network data to identify patterns, identify intelligence, and produce insight that can be used to automate networks. The road to Intent-Based Networking Systems (IBNS) and Application-Specific Networks (ASN) is a journey. That’s the belief of Rajesh Ghai, Research Director of Telecom and Carrier IP Networks at IDC.

Ghai defines IBNS as a closed-loop continuous implementation of several steps:

Related to that concept, Ghai explains, is ASN. “It’s also a concept which is software control and optimization and automation. The only difference is that ASN is more applicable to distributed applications over the internet than IBNS.”

“Think of intent-based networking as software that sits on top of your infrastructure and focusing on the networking infrastructure, and enables you to operate your network infrastructure as one system, as opposed to box per box,” explained Mansour Karam, Founder, CEO of Apstra, which offers IBNS solutions for enterprise data centers.

“To achieve this, we have to start with intent,” he continued. “Intent is both the high-level business outcomes that are required by the business, but then also we think of intent as applying to every one of those stages. You may have some requirements in how you want to build.”

Karam added, “Validation includes tests that you would run — we call them expectations — to validate that your network indeed is behaving as you expected, as per intent. So we have to think of a sliding scale of intent and then we also have to collect all the telemetry in order to close the loop and continuously validate that the network does what you want it to do. There is the notion of state at the core of an IBNS that really boils down to managing state at scale and representing it in a way that you can reason about the state of your system, compare it with the desired state and making the right adjustments if you need to.”
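The compare-and-adjust step Karam describes can be sketched in a few lines of Java. This is a deliberately simplified illustration, not Apstra’s implementation: the class name, the method, and the setting names (`vlan10`, `mtu`) are hypothetical, and a real system would gather the observed state from telemetry rather than from a hand-built map.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class IntentLoopSketch {
    // Returns the settings whose observed value is missing or differs from the desired value.
    static Map<String, String> adjustments(Map<String, String> desired, Map<String, String> observed) {
        Map<String, String> changes = new LinkedHashMap<>();
        for (Map.Entry<String, String> entry : desired.entrySet()) {
            String actual = observed.get(entry.getKey());
            if (!entry.getValue().equals(actual)) {
                changes.put(entry.getKey(), entry.getValue()); // drift found: restore the intended value
            }
        }
        return changes;
    }

    public static void main(String[] args) {
        Map<String, String> desired = new LinkedHashMap<>();
        desired.put("vlan10", "up");
        desired.put("mtu", "9000");

        Map<String, String> observed = new LinkedHashMap<>();
        observed.put("vlan10", "up");
        observed.put("mtu", "1500"); // hypothetical telemetry showing drift from intent

        System.out.println("Adjustments needed: " + adjustments(desired, observed));
    }
}
```

Running this comparison repeatedly against fresh telemetry is one way to picture the closed loop: an empty result means the network matches intent, and anything else is the list of corrections to apply.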

The upshot of IBNS, Karam said: “If you have powerful automation you’re taking the human out of the equation, and so you get a much more agile network. You can recoup the revenues that otherwise you would have lost, because you’re unable to deliver your business services on time. You will reduce your outages massively, because 80% of outages are caused by human error. You reduce your operational expenses massively, because organizations spend $4 operating every dollar of CapEx, and 80% of it is manual operations. So if you take that out you should be able to recoup easily your entire CapEx spend on IBNS.”

“Application-Specific Networks, like Intent-Based Networking Systems, enable digital transformation, agility, speed, and automation,” explained Galeal Zino, Founder of NetFoundry, which offers an ASN platform.

He continued, “ASN is a new term, so I’ll start with a simple analogy. ASNs are like private clubs (very, very exclusive private clubs) with exactly two members, the application and the network. ASN literally gives each application its own network, one that’s purpose-built and driven by the specific needs of that application. ASN merges the application world and the network world into software which can enable digital transformation with velocity, with scale, and with automation.”

Read more in my new article for Upgrade Magazine, “Manage smarter, more autonomous networks with Intent-Based Networking Systems and Application Specific Networking.”

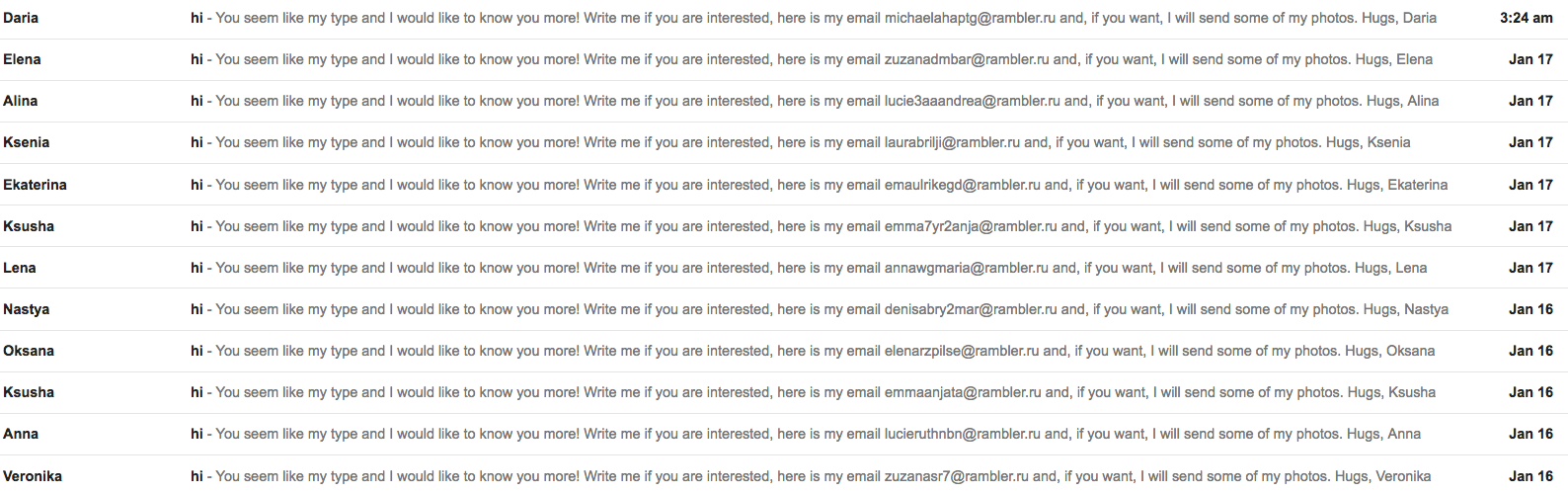

So many women in Russia are vying for my attention… and all of them, from Alina to Veronika, are using the exact same language. Needless to say, this is a scam.

The scammers try too hard. One message might have gotten someone’s attention – but receiving so many nearly-identical versions should set off alarm bells.

All the messages in this series requested a response to a unique email address at rambler.ru. Despite appearances, the messages were sent from several different domains. For example, the one from Daria appeared to be sent from the optimaledition.es domain in Spain. No telling where it really came from, though; the headers weren’t definitive.

Don’t respond to messages like this. Delete them.

Subj: Hi

You seem like my type and I would like to know you more! Write me if you are interested, here is my email [email hidden] and, if you want, I will send some of my photos. Hugs, Daria

When the little wireless speaker in your kitchen acts on your request to add chocolate milk to your shopping list, there’s artificial intelligence (AI) working in the cloud, to understand your speech, determine what you want to do, and carry out the instruction.

When you send a text message to your HR department explaining that you woke up with a vision-blurring migraine, an AI-powered chatbot knows how to update your status to “out of the office” and notify your manager about the sick day.

When hackers attempt to systematically break into the corporate computer network over a period of weeks, AI sees the subtle patterns in historical log data, recognizes outliers in the packet traffic, raises the alarm, and recommends appropriate countermeasures.

AI is nearly everywhere in today’s society. Sometimes it’s fairly obvious (as with a chatbot), and sometimes AI is hidden under the covers (as with network security monitors). It’s a virtuous cycle: Modern cloud computing and algorithms make AI a fast, efficient, and inexpensive approach to problem-solving. Developers discover those cloud services and algorithms and imagine new ways to incorporate the latest AI functionality into their software. Businesses see the value of those advances (even if they don’t know that AI is involved), and everyone benefits. And quickly, the next wave of emerging technology accelerates the cycle again.

AI can improve the user experience, such as when deciphering spoken or written communications, or inferring actions based on patterns of past behavior. AI techniques are excellent at pattern-matching, making it easier for machines to accurately decipher human languages using context. One characteristic of several AI algorithms is flexibility in handling imprecise data, such as human text. That is especially useful for chatbots, where humans can type messages on their phones, and AI-driven software can understand what they say and carry on a conversation, providing desired information or taking the appropriate actions.

If you think AI is everywhere today, expect more tomorrow. AI-enhanced software-as-a-service and platform-as-a-service products will continue to incorporate additional AI to help make cloud-delivered and on-prem services more reliable, more performant, and more secure. AI-driven chatbots will find their way into new, innovative applications, and speech-based systems will continue to get smarter. AI will handle larger and larger datasets and find its way into increasingly diverse industries.

Sometimes you’ll see the AI and know that you’re talking to a bot. Sometimes the AI will be totally hidden, as you marvel at the, well, uncanny intelligence of the software, websites, and even the Internet of Things. If you don’t believe me, ask a chatbot.

Read more in my feature article in the January/February 2018 edition of Oracle Magazine, “It’s Pervasive: AI Is Everywhere.”

“Proudly not pay-to-play, we invite all of this years’ recipients to share their success through a complementary digital certificate and copyright to the title.” So claims the award letter.

We received this email to our corporate info@ address last week. Can you smell the scam? Interestingly, the signature block is a graphic, probably to elude spam filters. The company is AI Global Media, who did not reply when we wrote to “Chelsea” with questions. But then again, given they “spent recent months examining the achievements of thousands of business leaders and looking at the contributions they have made to their companies, as well as their accomplishments over the course of the previous year,” and picked as their sole Arizona winner a small tech consulting firm which serves about a dozen clients…. what would you expect?

From: Chelsea Dytham

Subj: Carole, you are our Female CEO of the Year 2018 – Arizona

Hello Carole,

Your hard work and innovative approach has been recognised! We are delighted to announce that you are a recipient within AI’s annual CEO of the Year Awards. You have been awarded the title of:

Female CEO of the Year 2018 – Arizona

The CEO awards were founded to identify and recognise the outstanding leadership of CEOs across all industries and jurisdictions and will be commending just one CEO from each region and each sector. We have spent recent months examining the achievements of thousands of business leaders and looking at the contributions they have made to their companies, as well as their accomplishments over the course of the previous year.

In terms of determining those most deserving of this coveted accolade, we take into consideration significant achievements from the past calendar year, other accolades won, length of service, company performance since you took position at the helm (or since day one for those who have founded their businesses), as well as client testimonials and recommendations.

Proudly not pay-to-play, we invite all of this years’ recipients to share their success through a complementary digital certificate and copyright to the title.

If, on the other hand, you would like to really capitalise on this good news and reach more than 108,500 industry peers and potential clients, we have three packages for your consideration.

The Exclusive Package – 1,595 GBP

- Supporting image and headline on the front cover

- A 4-page editorial inclusion and Camden Associates will be the first company profiled

- Your inclusion replicated on the homepage of our website

- Your inclusion in the monthly newsletter, for 3 months

- A 6-month web banner

- 3 bespoke crystal trophies

- A personalised digital logo for use in your email signature/website

- High-resolution PDF copies of the inclusion

The Principal Package – 895 GBP

- A double-page inclusion in the first 20 pages

- Your inclusion in the monthly newsletter, for 1 month

- A 3-month web banner

- 2 bespoke crystal trophies

- A personalised digital logo for use in your email signature/website

- High-resolution PDF copies of the inclusion

The Foundation Package – 395 GBP

- A single-page inclusion in the magazine

- 1 bespoke crystal trophy

- A personalised digital logo for use in your email signature/website

Please note that P&P and (where applicable) VAT is charged in addition.

NB: The main front cover image is also currently available to purchase on a first come first served basis at a further price of 2,200 GBP. If this may be of interest please do let me know.

We have an entirely in-house editorial and design team who will assist you in putting together all items associated with your package, making the process as convenient for you as possible. Trophies and logos are available to purchase separately on request also.

If you would like to move forward, simply respond confirming your chosen package and its associated price, for example ‘Agreed, The Principal Package – 895 GBP’. Once I have received this I will forward your information over to our editorial team who will begin the creative process with you.

Should you have any questions or require more information regarding this programme, the packages, or the magazine in general, please don’t hesitate to get in touch and I will be more than happy to help.

I look forward to receiving your response, Carole.

Kind regards,

Chelsea Dytham

Millions of developers are using Artificial Intelligence (AI) or Machine Learning (ML) in their projects, says Evans Data Corp. Evans’ latest Global Development and Demographics Study, released in January 2018, says that 29% of developers worldwide, or 6,452,000 in all, are currently using some form of AI or ML. What’s more, says the study, an additional 5.8 million expect to use AI or ML within the next six months.

ML is actually a subset of AI. To quote expertsystem.com,

In practice, artificial intelligence – also simply defined as AI – has come to represent the broad category of methodologies that teach a computer to perform tasks as an “intelligent” person would. This includes, among others, neural networks or the “networks of hardware and software that approximate the web of neurons in the human brain” (Wired); machine learning, which is a technique for teaching machines to learn; and deep learning, which helps machines learn to go deeper into data to recognize patterns, etc. Within AI, machine learning includes algorithms that are developed to tell a computer how to respond to something by example.

The same site defines ML as,

Machine learning is an application of artificial intelligence (AI) that provides systems the ability to automatically learn and improve from experience without being explicitly programmed. Machine learning focuses on the development of computer programs that can access data and use it learn for themselves.

The process of learning begins with observations or data, such as examples, direct experience, or instruction, in order to look for patterns in data and make better decisions in the future based on the examples that we provide. The primary aim is to allow the computers learn automatically without human intervention or assistance and adjust actions accordingly.

A related and popular AI-derived technology, by the way, is Deep Learning. DL uses simulated neural networks to attempt to mimic the way a human brain learns and reacts. To quote from Rahul Sharma on Techgenix,

Deep learning is a subset of machine learning. The core of deep learning is associated with neural networks, which are programmatic simulations of the kind of decision making that takes place inside the human brain. However, unlike the human brain, where any neuron can establish a connection with some other proximate neuron, neural networks have discrete connections, layers, and data propagation directions.

Just like machine learning, deep learning is also dependent on the availability of massive volumes of data for the technology to “train” itself. For instance, a deep learning system meant to identify objects from images will need to run millions of test cases to be able to build the “intelligence” that lets it fuse together several kinds of analysis together, to actually identify the object from an image.

You can find AI, ML and DL everywhere, it seems. There are highly visible projects, like self-driving cars, or the speech recognition software inside Amazon’s Alexa smart speakers. That’s merely the tip of the iceberg. These technologies are embedded into the Internet of Things, into smart analytics and predictive analytics, into systems management, into security scanners, into Facebook, into medical devices.

A modern and highly visible application of AI/ML is the chatbot – software that can communicate with humans via textual interfaces. Some companies use chatbots on their websites or on social media channels (like Twitter) to talk to customers and provide basic customer services. Others use the tech within a company, such as in human-resources applications that let employees make requests (like scheduling vacation) by simply texting the HR chatbot.

AI is also paying off in finance. The technology can help service providers (like banks or payment-card transaction clearinghouses) more accurately review transactions to see if they are fraudulent, and improve overall efficiency. According to John Rampton, writing for the Huffington Post, AI investment by financial tech companies was more than $23 billion in 2016. The benefits of AI, he writes, include:

Rampton also explains that AI can offer game-changing insights:

One of the most valuable benefits AI brings to organizations of all kinds is data. The future of Fintech is largely reliant on gathering data and staying ahead of the competition, and AI can make that happen. With AI, you can process a huge volume of data which will, in turn, offer you some game-changing insights. These insights can be used to create reports that not only increase productivity and revenue, but also help with complex decision-making processes.

What’s happening in fintech with AI is nothing short of revolutionary. That’s true of other industries as well. Instead of asking why so many developers, 29%, are focusing on AI, we should ask, “Why so few?”

Wireless Ethernet connections aren’t necessarily secure. The authentication methods used to permit access between a device and a wireless router aren’t very strong. The encryption methods used to handle that authentication, and then the data traffic after authorization, aren’t very strong. The rules that enforce the use of authorization and encryption aren’t always enabled, especially with public hotspots like those in hotels, airports and coffee shops; there, the authentication is handled by a web browser application, not the Wi-Fi protocols embedded in a local router.

Helping to solve those problems will be WPA3, an update to decades-old wireless security protocols. Announced by the Wi-Fi Alliance at CES in January 2018, the new standard is said to:

Four new capabilities for personal and enterprise Wi-Fi networks will emerge in 2018 as part of Wi-Fi CERTIFIED WPA3™. Two of the features will deliver robust protections even when users choose passwords that fall short of typical complexity recommendations, and will simplify the process of configuring security for devices that have limited or no display interface. Another feature will strengthen user privacy in open networks through individualized data encryption. Finally, a 192-bit security suite, aligned with the Commercial National Security Algorithm (CNSA) Suite from the Committee on National Security Systems, will further protect Wi-Fi networks with higher security requirements such as government, defense, and industrial.

This is all good news. According to Zack Whittaker writing for ZDNet,

One of the key improvements in WPA3 will aim to solve a common security problem: open Wi-Fi networks. Seen in coffee shops and airports, open Wi-Fi networks are convenient but unencrypted, allowing anyone on the same network to intercept data sent from other devices.

WPA3 employs individualized data encryption, which scramble the connection between each device on the network and the router, ensuring secrets are kept safe and sites that you visit haven’t been manipulated.

Another key improvement in WPA3 will protect against brute-force dictionary attacks, making it tougher for attackers near your Wi-Fi network to guess a list of possible passwords.

The new wireless security protocol will also block an attacker after too many failed password guesses.

A challenge for the use of WPA2 is that a defect, called KRACK, was discovered and published in October 2017. To quote my dear friend John Romkey, founder of FTP Software:

The KRACK vulnerability allows malicious actors to access a Wi-Fi network without the password or key, observe what connected devices are doing, modify the traffic amongst them, and tamper with the responses the network’s users receive. Everyone and anything using Wi-Fi is at risk. Computers, phones, tablets, gadgets, things. All of it. This isn’t just a flaw in the way vendors have implemented Wi-Fi. No. It’s a bug in the specification itself.

The timing of the WPA3 release couldn’t be better. But what about older devices? I have no idea how many of my devices (including desktops, phones, tablets, and routers) will be able to run WPA3. I don’t know whether firmware updates will be applied automatically, or whether I will need to seek them out.

What’s more, what about the millions of devices out there? Presumably new hotspots will downgrade to WPA2 if a device can’t support WPA3. (And the other way around: A new mobile device will downgrade to talk to an older or unpatched hotel room’s Wi-Fi router.) It could take ages before we reach a critical mass of new devices that can handle WPA3 end-to-end.

The Wi-Fi Alliance says that it “will continue enhancing WPA2 to ensure it delivers strong security protections to Wi-Fi users as the security landscape evolves.” Let’s hope that is indeed the case, and that those enhancements can be pushed down to existing devices. If not, well, the huge installed base of existing Wi-Fi devices will continue to lack real security for years to come.

A fascinating website, “How Did Arizona Get its Shape?,” shows that continental expansion in North America led to armed conflicts with Native American groups. Collectively known as the American Indian Wars, the conflicts began in the 1600s, and continued in various forms for the next several centuries. Multiple conflicts occurred during the U.S.-Mexican War, as westward expansion led to draconian policies levied by the United States against Indian nations, forcibly removing them from their homelands to make way for U.S. settlers.

Less than 15 years after the conflict with Mexico, the Civil War broke out between the United States (the Union) and the 11 states that seceded to form the Confederate States of America. Had the Confederacy won the war, Arizona would have been a slave state oriented to the south of New Mexico rather than to the west.

During the Civil War, in 1863, President Abraham Lincoln signed the Arizona Organic Act, which split Arizona and New Mexico into separate territories along the north-to-south border that remains today. The Act also outlawed slavery in Arizona Territory, a critical distinction as the question of whether new states or territories would allow slavery dominated U.S. westward expansion policies.

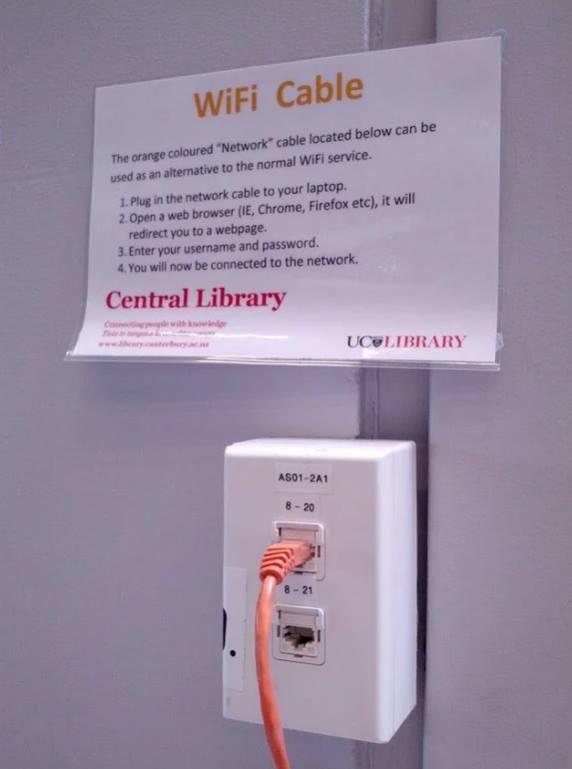

A friend insists that “the Internet is down” whenever he can’t get a strong wireless connection on his smartphone. With that type of logic, enjoy this photo found on the aforementioned Internet:

“Wi-Fi” is apparently now synonymous with “Internet” or “network.” It’s clear that we have come a long way from the origins of the Wi-Fi Alliance, which originally defined the term as meaning “Wireless Fidelity.” The vendor-driven alliance was formed in 1999 to jointly promote the broad family of IEEE 802.11 wireless local-area networking standards, as well as to ensure interoperability through certifications.

But that was so last millennium! It’s all Wi-Fi, all the time. In that vein, let me propose three new acronyms:

You get the idea….

“Thou shalt not refer winkingly to my taking off my robe after worship as disrobing.” A powerful essay by Pastor Melissa Florer-Bixler, “10 commandments for male clergy,” highlights the challenges that female clergy endure in a patriarchal tradition — and one in which they are still seen as interlopers to church/synagogue power. And in this era of #metoo, it’s still not easy for women in any aspect of leadership, including Jewish leadership.

In my life and volunteer work, I have the honor to work with clergy. Many, but not all, are rabbis and cantors who come from the traditions of Reform Judaism. Quite a few are women. I also work with female Conservative and Reconstructionist rabbis and cantors, as well as female pastors and ministers. And of course, there are lots of male clergy from those traditions as well as the male-only Orthodox Jewish and Roman Catholic domains.

Congregations, schools, seminaries, communities, and non-profits enjoy abundant blessings when employing and engaging with female clergy. However, that doesn’t mean that women clergy are always seen as first-class members of their profession, or that they are treated with the same respect as their male counterparts.

There are too many assumptions, says Pastor Florer-Bixler, who ministers at the Raleigh Mennonite Church. Too many jokes. Too many subtle sexist put-downs. I’ve heard those myself. To be honest, there are some jokes and patronizing assumptions that I’ve made myself. While always meant kindly, my own words and attitude contributed to the problem. In her essay, Pastor Florer-Bixler writes about mansplaining, stereotypes, and the unspoken notion that religious institutions are essentially masculine:

In her recent lecture-essay “Women in Power: From Medusa to Merkel,” Mary Beard describes the pervasiveness of the cultural stereotype that power — from the halls of ancient Greece to the modern parliament — is masculine.

She cites a January 2017 article in The London Times about women front-runners for the positions of bishop of London, commissioner of the Metropolitan Police and chair of the BBC governing board. The headline read: “Women prepare for a power grab in church, police and BBC.”

Beard points out that “probably thousands upon thousands of readers didn’t bat an eyelid” at the suggestion that those seats of power were the property of men — possessions being “grabbed,” that is, taken away, by women.

Pastor Florer-Bixler writes about sexism, and I cringe at having seen many of these behaviors without speaking out.

Drawing attention to pregnancy, making sexualizing comments about “disrobing,” suggesting that a clergywoman should smile more, describing a female pastor’s voice as “shrill” — all expose the discomfort that men feel about women in “their” profession.

More than just ridiculous humiliations, these stereotypes affect the ministries and careers of women in church leadership. One colleague discovered that a pastor search committee was told that for the salary they were offering, they should expect only women to be willing to serve. The committee was livid — not at the pay gap but at the idea that they would have to consider only women.

Pastor Florer-Bixler offers some suggestions for making systemic improvements in how we — male clergy, lay leaders, everyone — should work with female clergy.

Men have all-male theological traditions and ministerial roles to which they can retreat. Not so female pastors.

If a woman stands up to this patriarchal tradition, she faces the accusation of intolerance. Women should not be expected to “get along” with sexist individuals, theologies, practices and institutions as if this were a price to be paid for church unity.

What is the way forward? For one, men must do better. When male pastors co-opt ideas that have come from female colleagues, they must reassign the insights. When they learn of pay gaps, they must address them.

When female clergy are outtalked or overtalked, male pastors must name the imbalance. They must read the sermons, theology and books of women. And decline to purchase books written by men who exclude women from the pulpit.

Women are addressing this as we always have: through constant negotiation between getting the job done and speaking out against what is intolerable. In the meantime, we create spaces where women can begin to speak the truth of our power to one another. For now, this is what we have.

The way forward will unquestionably be slow, but we must be part of the solution. Let’s stop minimizing the problem or leaving it for someone else. Making a level playing field is more than men simply agreeing not to assault women, and this is not an issue for female clergy to address. Sexism is everyone’s issue. All of us must own it. And I, speaking as a male lay leader who works with many female clergy, pledge to do better.

It’s all about the tradeoffs! You can have the chicken or the fish, but not both. You can have the big engine in your new car, but that means a stick shift—you can’t have the V8 and an automatic. Same for that cake you want to have and eat. Your business applications can be easy to use or secure—not both.

But some of those are false dichotomies, especially when it comes to security for data center and cloud applications. You can have it both ways. The systems can be easy to use and maintain, and they can be secure.

On the consumer side, consider two-factor authentication (2FA), whereby users receive a code number, often by text message to their phones, which they must type into a webpage to confirm their identity. There’s no doubt that 2FA makes systems more secure. The problem is that 2FA is a nuisance for the individual end user, because it slows down access to a desired resource or application. Unless you’re protecting your personal bank account, there’s little incentive for you to use 2FA. Thus, services that require 2FA frequently aren’t used, get phased out, are subverted, or are simply loathed.
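The text-message flavor of 2FA described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any particular service's implementation: the `OneTimeCodeStore` class, the five-minute validity window, and the one-attempt-per-code rule are all hypothetical choices made for this example, and the step that actually sends the text message is left out.

```python
import hmac
import secrets
import time

CODE_TTL_SECONDS = 300  # assumed validity window; a real policy may differ


class OneTimeCodeStore:
    """Hypothetical in-memory store of pending login codes (illustration only)."""

    def __init__(self, clock=time.time):
        self._clock = clock      # injectable clock, which makes expiry testable
        self._pending = {}       # user_id -> (code, expires_at)

    def issue(self, user_id):
        """Create a random six-digit code to be texted to the user's phone."""
        code = f"{secrets.randbelow(10**6):06d}"
        self._pending[user_id] = (code, self._clock() + CODE_TTL_SECONDS)
        return code

    def verify(self, user_id, submitted):
        """Return True only for the correct, unexpired code, and only once."""
        # The pending code is consumed on every attempt, so a wrong guess
        # forces a new code to be issued (an assumed, deliberately strict rule).
        entry = self._pending.pop(user_id, None)
        if entry is None:
            return False
        code, expires_at = entry
        if self._clock() > expires_at:
            return False
        # Constant-time comparison avoids leaking digits through timing.
        return hmac.compare_digest(code.encode(), submitted.encode())
```

Even in this toy version the nuisance is visible: the user has to wait for the message, switch apps, and retype six digits before the clock runs out, which is exactly the friction that makes 2FA unpopular.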

Likewise, security measures specified by corporate policies can be seen as a nuisance or an impediment. Consider dividing an enterprise network into small “trusted” networks, such as by using virtual LANs or other forms of authenticating users, applications, or API calls. This setup can require considerable effort for internal developers to create, and even more effort to modify or update.

When IT decides to migrate an application from a data center to the cloud, the steps required to create API-level authentication across such a hybrid deployment can be substantial. The effort required to debug that security scheme can be horrific. As for audits to ensure adherence to the policy? Forget it. How about we just bypass it, or change the policy instead?

Multiply that simple scenario by 1,000 for all the interlinked applications and users at a typical midsize company. Or 10,000 or 100,000 at big ones. That’s why post-mortem examinations of so many security breaches show what appears to be an obvious lack of “basic” security. However, my guess is that in many of those incidents, the chief information security officer or IT staffers were under pressure to make systems, including applications and data sources, extremely easy for employees to access, and there was no appetite for creating, maintaining, and enforcing strong security measures.

Read more about these tradeoffs in my article on Forbes for Oracle Voice: “You Can Have Your Security Cake And Eat It, Too.”

I’m #1! Well, actually #4 and #7. During 2017, I wrote several articles for Hewlett Packard Enterprise’s online magazine, Enterprise.nxt Insights, and two of them were quite successful – named as #4 and #7 in the site’s list of Top 10 Articles for 2017.

Article #7 was, “4 lessons for modern software developers from 1970s mainframe programing.” Based entirely on my own experiences, the article began,

Eight megabytes of memory is plenty. Or so we believed back in the late 1970s. Our mainframe programs usually ran in 8 MB virtual machines (VMs) that had to contain the program, shared libraries, and working storage. Though these days, you might liken those VMs more to containers, since the timesharing operating system didn’t occupy VM space. In fact, users couldn’t see the OS at all.

In that mainframe environment, we programmers learned how to be parsimonious with computing resources, which were expensive, limited, and not always available on demand. We learned how to minimize the costs of computation, develop headless applications, optimize code up front, and design for zero defects. If the very first compilation and execution of a program failed, I was seriously angry with myself.

Please join me on a walk down memory lane as I revisit four lessons I learned while programming mainframes and teaching mainframe programming in the era of Watergate, disco on vinyl records, and Star Wars—and which remain relevant today.

Article #4 was, “The OWASP Top 10 is killing me, and killing you!” It began,

Software developers and testers must be sick of hearing security nuts rant, “Beware SQL injection! Monitor for cross-site scripting! Watch for hijacked session credentials!” I suspect the developers tune us out. Why? Because we’ve been raving about the same defects for most of their careers. Truth is, though, the same set of major security vulnerabilities persists year after year, decade after decade.

The industry has generated newer tools, better testing suites, Agile methodologies, and other advances in writing and testing software. Despite all that, coders keep making the same dumb mistakes, peer reviews keep missing those mistakes, test tools fail to catch those mistakes, and hackers keep finding ways to exploit those mistakes.

One way to see the repeat offenders is to look at the OWASP Top 10, a sometimes controversial ranking of the 10 primary vulnerabilities, published every three or four years by the Open Web Application Security Project.

The OWASP Top 10 list is not controversial because it’s flawed. Rather, some believe that the list is too limited. By focusing only on the top 10 web code vulnerabilities, they assert, it causes neglect for the long tail. What’s more, there’s often jockeying in the OWASP community about the Top 10 ranking and whether the 11th or 12th belong in the list instead of something else. There’s merit to those arguments, but for now, the OWASP Top 10 is an excellent common ground for discussing security-aware coding and testing practices.

Click the links (or pictures) above and enjoy the articles! And kudos to my prolific friend Steven J. Vaughan-Nichols, whose articles took the #3, #2 and #1 slots. He’s good. Damn good.

Amazon says that a cloud-connected speaker/microphone was at the top of the charts: “This holiday season was better than ever for the family of Echo products. The Echo Dot was the #1 selling Amazon Device this holiday season, and the best-selling product from any manufacturer in any category across all of Amazon, with millions sold.”

The Echo products are an ever-expanding family of inexpensive consumer electronics from Amazon, which connect to a cloud-based service called Alexa. The devices are always listening for spoken commands, and will respond through conversation, playing music, turning on/off lights and other connected gadgets, making phone calls, and even by showing videos.

While Amazon doesn’t release sales figures for its Echo products, it’s clear that consumers love them. In fact, Echo is about to hit the road, as BMW will integrate the Echo technology (and Alexa cloud service) into some cars beginning this year. Expect other automakers to follow.

Why the Echo – and Apple’s Siri and Google’s Home? Speech.

The traditional way of “talking” to computers has been through the keyboard, augmented with a mouse used to select commands or input areas. Computers initially responded only to typed instructions using a command-line interface (CLI); this was replaced in the era of the Apple Macintosh and the first iterations of Microsoft Windows with windows, icons, menus, and pointing devices (WIMP). Some refer to the modern interface used on standard computers as a graphic user interface (GUI); embedded devices, such as network routers, might be controlled by either a GUI or a CLI.

Smartphones, tablets, and some computers (notably running Windows) also include touchscreens. While touchscreens have been around for decades, it’s only in the past few years they’ve gone mainstream. Even so, the primary way to input data is still through a keyboard – even if it’s a “soft” keyboard implemented on a touchscreen, as on a smartphone.

Enter speech. Sometimes it’s easier to talk, simply talk, to a device than to use a physical interface. Speech can be used for commands (“Alexa, turn up the thermostat” or “Hey Google, turn off the kitchen lights”) or for dictation.

Speech recognition is not easy for computers; in fact, it’s pretty difficult. However, improved microphones and powerful artificial-intelligence algorithms make speech recognition a lot easier. Helping the process: Cloud computing, which can throw nearly unlimited resources at speech recognition, including predictive analytics. Another helper: Constrained inputs, which means that when it comes to understanding commands, there are only so many words for the speech recognition system to decode. (Free-form dictation, like writing an essay using speech recognition, is a far harder problem.)

Speech recognition is only going to get better – and bigger. According to one report, “The speech and voice recognition market is expected to be valued at USD 6.19 billion in 2017 and is likely to reach USD 18.30 billion by 2023, at a CAGR of 19.80% between 2017 and 2023. The growing impact of artificial intelligence (AI) on the accuracy of speech and voice recognition and the increased demand for multifactor authentication are driving the market growth.” The report continues:

“The speech recognition technology is expected to hold the largest share of the market during the forecast period due to its growing use in multiple applications owing to the continuously decreasing word error rate (WER) of speech recognition algorithm with the developments in natural language processing and neural network technology. The speech recognition technology finds applications mainly across healthcare and consumer electronics sectors to produce health data records and develop intelligent virtual assistant devices, respectively.

“The market for the consumer vertical is expected to grow at the highest CAGR during the forecast period. The key factor contributing to this growth is the ability to integrate speech and voice recognition technologies into other consumer devices, such as refrigerators, ovens, mixers, and thermostats, with the growth of Internet of Things.”
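The report's headline figures can be sanity-checked with the standard compound annual growth rate formula. This short Python sketch uses the 2017 and 2023 market values quoted in the report; the `cagr` function name is my own.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # Figures quoted in the report: USD 6.19 billion (2017), USD 18.30 billion (2023).
    rate = cagr(6.19, 18.30, 2023 - 2017)
    print(f"Implied CAGR: {rate:.2%}")
```

The result comes out at roughly 19.8%, so the growth rate the report states is consistent with its start and end values.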

Right now, many of us are talking to Alexa, talking to Siri, and talking to Google Home. Back in 2009, I owned a Ford car that had a primitive (and laughably inaccurate) infotainment system – today, a new car might do a lot better, perhaps due to embedded Alexa. Will we soon be talking to our ovens, to our laser printers and photocopiers, to our medical implants, to our assembly-line equipment, and to our network infrastructure? It wouldn’t surprise Alexa in the least.