Microsoft’s woes are too big to ignore.

Problem area number one: The high-profile Surface tablet/notebook device is flopping. While the 64-bit Intel-based Surface Pro hasn’t sold well, the 32-bit ARM-based Surface RT tanked. Big time. Microsoft just slashed its price — maybe that will help. Too little too late?

To quote from Nathan Ingraham’s recent story in The Verge,

Microsoft just announced earnings for its fiscal Q4 2013, and while the company posted strong results it also revealed some details on how the Surface RT project is costing the business money. Microsoft’s results showed a $900 million loss due to Surface RT “inventory adjustments,” a charge that comes just a few days after the company officially cut Surface RT prices significantly. This $900 million loss comes out of the company’s total Windows revenue, though it’s worth noting that Windows revenue still increased year-over-year. Unfortunately, Microsoft still doesn’t give specific Windows 8 sales or revenue numbers, but it probably performed well this quarter to make up for the big Surface RT loss.

At the end of the day, though, it looks like Microsoft just made too many Surface RT tablets — we heard late last year that Microsoft was building three to five million Surface RT tablets in the fourth quarter, and we also heard that Microsoft had only sold about one million of those tablets in March. We’ll be listening to Microsoft’s earnings call this afternoon to see if they further address Surface RT sales or future plans.

Microsoft has spent heavily, and invested a lot of its prestige, in the Surface. It needs to fix Windows 8 and make this platform work.

Problem area number two: A dysfunctional structure. A recent story in the New York Times reminded me of this 2011 cartoon depicting six tech companies’ organizational charts. Look at Microsoft. Yup.

Steve Ballmer, who has been CEO since 2000, is finally trying to do something about the battling business units. The new structure, announced on July 11, is called “One Microsoft,” and in a public memo by Ballmer, the goal is described as:

Going forward, our strategy will focus on creating a family of devices and services for individuals and businesses that empower people around the globe at home, at work and on the go, for the activities they value most.

Editing and restructuring the info in that memo somewhat, here’s what the six key non-administrative groups will look like:

Operating Systems Engineering Group will span all OS work for console, to mobile device, to PC, to back-end systems. The core cloud services for the operating system will be in this group.

Devices and Studios Engineering Group will have all hardware development and supply chain from the smallest to the largest devices, and studios experiences including all games, music, video and other entertainment.

Applications and Services Engineering Group will have broad applications and services core technologies in productivity, communication, search and other information categories.

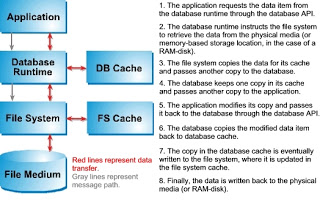

Cloud and Enterprise Engineering Group will lead development of back-end technologies like datacenter, database and specific technologies for enterprise IT scenarios and development tools, plus datacenter development, construction and operation.

Advanced Strategy and Research Group will be focused on the intersection of technology and policy, and will drive the cross-company looks at key new technology trends.

Business Development and Evangelism Group will focus on key partnerships especially with innovation partners (OEMs, silicon vendors, key developers, Yahoo, Nokia, etc.) and broad work on evangelism and developer outreach.

If implemented as described, this new organization should certainly eliminate waste, including redundant research and product developments. It might improve compatibility between different platforms and cut down on mixed messages.

However, it may also constrain the freedom to innovate, and promote the unhealthy “Windows everywhere” philosophy that has hamstrung Microsoft for years. It’s bad to spend time creating multiple operating systems, multiple APIs, multiple dev tool chains, multiple support channels. It’s equally bad to make one operating system, API set, dev tool chain and support channel fit all platforms and markets.

Another concern is the movement of developer outreach into a separate group that’s organizationally distinct from the product groups. Will that distance Microsoft’s product developers from customers and ISVs? Maybe. Will the most lucrative products get better developer support? Maybe.

Microsoft has excelled in developer support, and I’d hate to see that suffer as part of the new strategy.

Read Steve Ballmer’s memo. What do you think?

Z Trek Copyright (c) Alan Zeichick

We need all the technical talent we can get. Whether we are talking developers, architects, network staff, IT admins, managers, hardware, software or firmware, the more women in technology, the better. For everyone – for companies, for customers, for women and for men.

I’ve had the opportunity to meet and listen to Steve Wozniak several times over the years. He’s always funny and engaging, and his scriptless riffs get better all the time. With this one, he had me rolling in the aisle.

It looks like the tech industry is hiring more women. Maybe. Maybe not. The statistics are hard to interpret. Also, it’s unclear if the newly hired women are performing technical or other jobs.

If there’s no news… well, let’s make some up. That’s my thought upon reading all the stories about Apple’s forthcoming iWatch – a product that, as far as anyone knows, doesn’t exist.

Today’s word is “open.” What does open mean in terms of open platforms and open standards? It’s a tricky concept. Is Windows more open than Mac OS X? Is Linux more open than Solaris? Is Android more open than iOS? Is the Java language more open than C#? Is Firefox more open than Chrome? Is SQL Server more open than DB2?

In 1996, according to the Wikipedia,