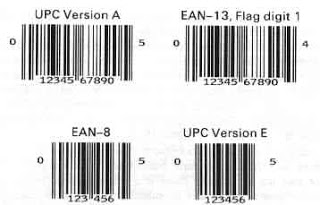

Let us spend a moment pondering the barcode. Barcodes are everywhere. Everywhere. Every time you visit a grocery store, cashiers use barcodes to ring up your purchases. That is, unless you choose the self-checkout, where you scan them yourself. Airlines send barcodes to phones for paperless check-in. Barcodes track warehouse inventory and adorn corporate asset tags.

The newer QR codes appear in magazine ads, on bus stop signs and on overhead billboards. Aim your mobile phone’s camera and click to visit a website. At Google I/O in May, attendee badges had QR codes linked to vCard profiles: instead of exchanging business cards, you just zap someone’s badge with your phone and load their info right into your contact database. After Google I/O, I immediately ordered a new set of business cards with a QR code printed on the back.
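
For the curious, the payload behind a vCard QR code is just structured text. The minimal Python sketch below assembles a vCard 3.0 record (the format defined in RFC 2426). The contact details and the function names are hypothetical, and I’m not claiming this is exactly what the Google I/O badges encoded; any QR-encoding library could then turn the resulting string into the printed code.

```python
def escape_vcard_text(value: str) -> str:
    """Escape characters that have special meaning in vCard 3.0 text values."""
    return (
        value.replace("\\", "\\\\")  # backslash first, so later escapes survive
        .replace(",", "\\,")
        .replace(";", "\\;")
        .replace("\n", "\\n")
    )


def build_vcard(given: str, family: str, org: str, email: str) -> str:
    """Assemble a minimal vCard 3.0 record suitable for a QR-code payload."""
    g, f = escape_vcard_text(given), escape_vcard_text(family)
    lines = [
        "BEGIN:VCARD",
        "VERSION:3.0",
        f"N:{f};{g};;;",  # family;given;additional;prefix;suffix
        f"FN:{g} {f}",   # formatted (display) name
        f"ORG:{escape_vcard_text(org)}",
        f"EMAIL;TYPE=INTERNET:{email}",
        "END:VCARD",
    ]
    # vCard content lines are terminated with CRLF.
    return "\r\n".join(lines) + "\r\n"


# Hypothetical contact details, for illustration only.
card = build_vcard("Jane", "Developer", "Example Widgets, Inc.", "jane@example.com")
print(card)
```

Note the comma in the company name comes out escaped as `Example Widgets\, Inc.`, since an unescaped comma would be read as a value separator.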

Decades ago, computer magazines printed source code and data sets using a barcode format called Cauzin Softstrip. It let pre-Internet PCs load applications using a handheld wand connected to the RS-232 serial port.

Back in the 1980s I had a barcode reader for my HP-41C programmable calculator. While the HP-41C generally used magnetic cards for persistent storage, printed barcode sheets were used for mass distribution of binary information, including programs and data. (I still use the HP-41C, but the barcode reader is long gone.)

And don’t get me started about the :CueCat.

Barcodes are so ubiquitous that I’ve never given them even a second’s thought. They are simply a convenience when they work properly, and an annoyance when they don’t scan. Thus, I was surprised to learn of the passing of Alan Haberman, lauded as the man who popularized the barcode, at least for supermarkets and other point-of-purchase applications.

While the late Mr. Haberman didn’t invent the barcode itself, he did push for the adoption of the IBM-invented Universal Product Code (UPC), which ended up as the standard for point-of-sale scanners. According to his obituary in the New York Times, the UPC barcode made its public debut in 1974.

In a world of seemingly more advanced technologies (the mag stripe, the EMV chip in European credit cards, RFID systems and the emerging near-field communications systems), the least common denominator is the low-tech barcode. Easy to create, easy to reproduce, easy to scan… what’s not to like? I’m sure we’ll be using UPC barcodes and QR codes for many decades to come, long after some of the newer technologies have disappeared.
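
“Easy to create” is quite literal for UPC-A. A UPC-A code is 12 digits, the last of which is a check digit computed from the first 11 with simple modulo-10 arithmetic: triple the sum of the odd-position digits, add the even-position digits, and pick whatever digit brings the total to a multiple of 10. Here’s a minimal Python sketch of that well-known calculation; the sample number is just an example, and the sketch covers only the digits, not rendering the bars.

```python
DIGITS = "0123456789"


def upc_a_check_digit(first_eleven: str) -> int:
    """Return the UPC-A check digit for an 11-digit number."""
    if len(first_eleven) != 11 or any(c not in DIGITS for c in first_eleven):
        raise ValueError("expected exactly 11 ASCII digits")
    digits = [int(c) for c in first_eleven]
    odd_positions = sum(digits[0::2])   # 1st, 3rd, ..., 11th digits
    even_positions = sum(digits[1::2])  # 2nd, 4th, ..., 10th digits
    total = 3 * odd_positions + even_positions
    # The digit that brings the total up to a multiple of 10.
    return (10 - total % 10) % 10


def is_valid_upc_a(code: str) -> bool:
    """Check a full 12-digit UPC-A code against its check digit."""
    if len(code) != 12 or any(c not in DIGITS for c in code):
        return False
    return upc_a_check_digit(code[:11]) == int(code[11])


print(upc_a_check_digit("03600029145"))  # 2
print(is_valid_upc_a("036000291452"))    # True
print(is_valid_upc_a("036000291453"))    # False
```

Because 3 and 1 are both coprime with 10, any single mistyped digit changes the check digit, which lets a scanner reject a bad read instead of ringing up the wrong item.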

Last week, my friend Adam Kolawa, founder of Parasoft, passed away suddenly. Adam, whom I’ve known for over a decade, was a young man, only 53 years old.

In a brief statement, Parasoft described Adam’s legacy as follows:

In 1983, Kolawa came to the United States from Poland to obtain a Ph.D. in Theoretical Physics from the California Institute of Technology. While at Caltech, he worked with Geoffrey Fox and helped design and implement the Intel hypercube parallel computer. In 1987, he founded Parasoft with four friends from Caltech. Initially, the company focused on parallel processing technologies. Kolawa transitioned the company from a parallel processing system producer, to a software development tool producer, to a provider of software solutions and services that help organizations deliver better software faster.

In my work at SD Times, I’ve had many occasions to visit with Adam. Sometimes we’d hang out in the Bay Area, sometimes down at Parasoft’s offices in Pasadena, Calif., sometimes at conferences – we invited him to keynote our Software Test & Performance Conference in 2005.

Over the years, our relationship was occasionally… how to put it delicately… turbulent. Adam was one of the smartest people I’ve ever met – brilliant. Passionate about software development, about software quality, and about his company, Parasoft. Whenever we wrote something in SD Times that he felt maligned his company (or worse, ignored it), I could expect an email from someone saying that Adam was on the warpath again.

However, because I am equally passionate about SD Times’ editorial integrity (which he respected), a brief phone chat would always set things right again. I was sincerely honored when he invited me to write the foreword and front-cover blurb for his book, “Bulletproofing Web Applications” (M&T Books, 2002).

Adam’s passion was unrelenting, and his feelings strong. But he was also warm and incredibly funny. It became a tradition to swing through Pasadena on my way home from SoCal, just to say “Hello.”

Adam Kolawa was a brilliant man, a bright light in our industry, a kind soul, and a good friend. May his memory be a blessing to all who knew him.

Novell is now part of Attachmate. Should you care? Yes.

The deal closed on Wednesday, Apr. 27. “Novell, Inc., the leader in intelligent workload management, today announced that it has completed its previously announced merger, whereby Attachmate Corporation acquired Novell for $6.10 per share in cash. Novell is now a wholly owned subsidiary of The Attachmate Group, the parent company of Attachmate Corporation.”

Novell means lots of different things to different people. To me, the word “Novell” will always be followed by “NetWare,” just as surely as “Lotus” is followed by “1-2-3.” (Yes, Alan is a dinosaur.)

To its more contemporary followers, Novell means two big open-source projects: SUSE Linux and Mono, an open-source implementation of the Microsoft .NET Framework and Common Language Runtime.

There are lots of other Novell products, to be sure – ZENworks, data center management tools, GroupWise, etc. See the big long list at http://www.novell.com/products/.

However, the only two that truly matter, from the software development perspective at least, are SUSE Linux and Mono.

SUSE is one of the Big Two enterprise-class distributions, along with Red Hat. (There are oodles of consumer-facing distros, like Ubuntu.) When it comes to running on large hardware, I’ve always considered SUSE to be the de facto leader. Given that Attachmate serves the same large enterprise customer base, I’m confident that SUSE will continue to evolve as a strong platform.

Mono is a concern. First, I’m not sure if Mono has a sustainable business model – or that it even has a business model at all. Second, Microsoft was a key behind-the-scenes player in Attachmate’s acquisition of Novell – see my comments from last November. It’s not in Microsoft’s best interest for Mono to exist. After all, if you can run .NET applications on Linux, you’re not locked into buying Windows Server.

My prediction: Under Attachmate, SUSE will flourish, and Mono will wither. I expect Mono to suffer intentional neglect, rather than a bold stroke, until it weakens, loses relevance, and fades away.

This is a depressing thought. It’s almost as depressing as the news that Overstock.com purchased the naming rights to the stadium used by the Oakland A’s baseball team and the Oakland Raiders football team. The idea of visiting “Overstock.com Coliseum” is somewhat nauseating.

A few years ago, a multi-day power failure on Long Island left our offices in the dark – including the Microsoft Exchange Server in our server closet. After that experience, and a few others involving the local electrical grid, we moved our mail system into the cloud for improved reliability.

This week, a failure of some sort took down one of the best-known cloud services. As I write this on Friday morning (April 22), Amazon is still struggling to fix Amazon Web Services, which has big problems in its Northern Virginia data center. Some services came back online on Thursday, but others are still down.

As of the time of writing, Amazon has not provided any sort of explanation for the outage, or disclosed how many of its customers were affected. The best customer reports are on Twitter. But according to the AWS Service Health Dashboard, there are issues with the company’s Elastic Compute Cloud, Relational Database Service, Elastic MapReduce, CloudFormation and Elastic Beanstalk resources in its US-EAST-1 region. The problems include high latency and error rates affecting instance launches, API access, database connectivity… the list goes on and on.

Are/were you affected by the AWS failure? Probably. We were.

SD Times doesn’t use AWS for any of our internal IT needs. We have some systems that use Google’s Web services, but have nothing stored in Amazon, or that use the AWS APIs directly.

On the other hand, for our technical conferences (like SPTechCon for SharePoint developers and admins), we like to create funny promotional videos using Xtranormal. Those videos (again, as I write this) are down, and have been for 21 hours. As the company tweeted on Thursday, “Hang tight, peeps. Those pesky ‘Amazon’ issues you might have heard about this morning affected us as well.”

The good news is that we know one thing for sure: Skynet, the global computer system in the Terminator movies, didn’t take down Amazon Web Services. One of the company’s support team members, Luke@AWS, posted:

From the information I have and to answer your questions, SkyNet did not have anything to do with the service event at this time.

The bad news is that, with the exception of purely siloed enterprise IT systems, we’re all using cloud computing, all the time. Even if we’re not subscribing to those services ourselves, the chain of dependencies, thanks to APIs, Web services, RSS feeds, and external data providers, means that our IT is only as strong as the weakest link.
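
A back-of-the-envelope way to see the weakest-link problem: if a page needs several services to all be up, and their failures are independent, its availability is the product of theirs, so the chain is actually a bit weaker than its weakest link. This minimal sketch uses hypothetical 99.9% figures and hypothetical function names. The independence assumption is exactly what a shared-region outage like this one violates, so treat the numbers as a rough guide, not a forecast.

```python
from math import prod
from typing import Iterable

HOURS_PER_YEAR = 24 * 365


def chain_availability(availabilities: Iterable[float]) -> float:
    """Availability of a request path that needs *every* dependency up.

    Assumes failures are independent; shared infrastructure (one data
    center, one cloud region) breaks that assumption.
    """
    return prod(availabilities)


def downtime_hours_per_year(availability: float) -> float:
    """Expected hours per year that the chain is unavailable."""
    return (1.0 - availability) * HOURS_PER_YEAR


# Hypothetical: your app, a third-party API, and a video host, each 99.9% up.
combined = chain_availability([0.999, 0.999, 0.999])
print(f"{combined:.4%}")                                # 99.7003%
print(f"{downtime_hours_per_year(combined):.1f} h/yr")  # 26.3 h/yr
```

One 99.9% service alone works out to about 8.8 hours of downtime a year; chaining three of them roughly triples that.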

I was surprised to read that MKS Inc. was being purchased by PTC (a firm I’d never heard of).

Most people today know MKS as a seller of enterprise application lifecycle management tools. The Waterloo, Ont.-based company’s flagship is Integrity, an ALM suite that covers requirements, modeling, coding and testing. While I’ve never used Integrity, I’ve heard good things about it over the years.

To me, however, MKS will always be Mortice Kern Systems, makers of MKS Toolkit. The toolkit comprised hundreds of Unix-inspired command-line utilities for DOS: text tools like grep and awk, but also essentials like the vi editor. The built-in set of DOS command-line tools was minimal, and didn’t come anywhere near the power and flexibility of what was available for Unix.

For a developer who used a PC during the early 1980s, MKS Toolkit made a clumsy environment bearable. In fact, as a judge for the 2007 Jolt Awards, published by Software Development Magazine (no relation to SD Times), I wrote up a winner as:

MKS Toolkit 5.2

Mortice Kern Systems Inc.

Once you’ve used UNIX, you can never forget the power of a real command line. That’s where MKS Toolkit comes in. MKS Toolkit is long known for bringing the power of UNIX to DOS and OS/2. Now version 5.2 brings the same benefits to 32-bit Windows workstations.

With MKS Toolkit, you get all the familiar UNIX commands like awk, grep, ps, tar, pax, cpio, make, sort, and the Korn shell. But with this new Windows version, you also get Perl, web (which lets you access web pages from the command line), and Windows GUI versions of vi and vdiff, and more.

If you don’t know what those UNIX functions are, MKS Toolkit’s not for you. But if you’re itching for the command line–but find a Microsoft mouse instead–then this utility suite gives you the best of all possible worlds.

MKS ditched the “Mortice Kern Systems” name a decade ago, but still sells MKS Toolkit, albeit on a different website from its enterprise solutions. Integrity is at www.mks.com, but MKS Toolkit is at www.mkssoftware.com.

I haven’t used MKS Toolkit in many years, but am glad that the product is still available. Let’s hope PTC keeps MKS Toolkit going.

I was recently at a party (okay, the speakers’ party at iPhone/iPad DevCon last week) where it was obvious that the speakers neatly bifurcated into two groups. There were those who recognized Mike Oldfield’s Tubular Bells playing on the stereo, and those who didn’t.

(You could also identify the Tubular Bells cognoscenti by the relative abundance of gray hair, compared to those grooving to the sounds of Girl Talk.)

Here’s another litmus test: What does “UX” mean to you? The definition may be revealing about how our world is changing.

If you see UX and think Unix (or perhaps UNIX), then you belong to the world of classic IT. Maybe you’re thinking about HP-UX, which is Hewlett-Packard’s version. Or A/UX, which I actually had running on a Mac Quadra a billion years ago. Either way, until recently, for me UX==Unix.

If you see UX and think User Experience, not Unix, you’re part of a newer generation. Perhaps you were inspired by Donald Norman’s classic “The Design of Everyday Things.” Maybe you’re thinking about AJAX or rich Internet applications. UI is so passé – it’s all UX now. They say there’s an app for that.

Unix lived and died by the command line, and by the ubiquitous and essential man pages, which told you everything you needed to know. It was a world where a single mistake (forgetting an argument, making an argument upper-case instead of lower-case, putting parameters in the wrong order) would lead to disaster. If you knew what you were doing, you were a guru. If you didn’t, you were doomed.

Today’s User Experience-driven world is diametrically opposite. Android phones, Web applications and iPads have minimal controls. Everything should be modeless, whenever possible; everything should be obvious; pop-ups are bad; grayed-out options are bad. If it’s not intuitive, you’ve failed. If you ever watched a child pick up an iPhone or an iPad for the first time, launch an app and start painting or playing, you’ve seen what UX is all about.

There are no man pages for mobile applications or modern interactive Web applications. In fact, often there’s no help system or README.TXT or any end-user documentation whatsoever.

In object-oriented development, it’s a bad practice to overload an operator or a method with one that does the exact opposite function. (It’s like having to press the Start button to shut down Windows – it’s silly and counterintuitive).

Yet that’s exactly what we’ve done by overloading UX. We’ve taken an abbreviation for Unix, traditionally the operating system that requires the most up-front knowledge from its end users, and now it refers to the goal of ensuring that users can simply figure out how to use a system intuitively, without any training or documentation or anything.

Like one of Girl Talk’s mashups, an app with a great UX should simply get you moving. What’s not to like?

The lawsuits flying around Silicon Valley are getting ridiculous.

• Microsoft is suing Barnes & Noble because Microsoft says that Android infringes Microsoft patents, and B&N’s Nook e-reader is based on Android. Thus, B&N must be forced to license Microsoft patents.

• Oracle is suing Google because Oracle says that Android’s Dalvik virtual machine infringes some of Sun’s JVM patents.

• Apple is suing Amazon.com because Apple says that “App Store” is an Apple trademark, and thus Amazon must not use “Amazon Appstore” for its Android, well, app store.

• Microsoft is suing Apple because Microsoft says that Apple’s “App Store” trademark uses generic English words, and thus shouldn’t be trademarked. Of course, Microsoft has rigorously defended its trademarks of Windows, Word and Office.

• And recently, Microsoft filed a European antitrust complaint against – guess who – Google.

The latest Microsoft complaint reveals that as worried as Redmond has been about search, it’s also concerned about Windows Phone. According to this post by Brad Smith, Microsoft’s General Counsel, there are six allegations:

1. Google’s search engine has an unfair advantage over Microsoft’s Bing and other search engines when it comes to crawling YouTube content.

2. Google refuses to allow Windows Phone to access YouTube metadata, but allows Android and iPhone devices to do so.

3. Google is attempting to block access to content owned by book publishers by seeking exclusive rights to scan and distribute so-called orphaned books – those still under copyright, but for which the copyright owner can’t be found.

4. Google unfairly restricts its advertisers’ ability to use data and analytics in an interoperable way with competing search platforms, specifically Microsoft’s adCenter.

5. Google contractually prohibits European websites that use a Google search box from also using a competing search box. Microsoft says this not only freezes out Bing directly, but also blocks web publishers from using Windows Live Services, which embeds a Bing search box.

6. Google discriminates against its own competitors (like Microsoft) by making it very expensive for them to buy good ad positions using Google’s ad tools.

Is there any truth to these allegations? That’s for the European Commission to decide. But what it all comes down to is: Microsoft can’t compete, so it’s going to the courts. The company has the right to sue, of course.

But I hope that if Microsoft succeeds in overturning Apple’s “App Store” trademark, the decision is broad enough to overturn Windows, Word and Office as well. Fair is fair.

When you’re building a website or a Web application – how big is the user’s screen going to be? That used to be an easy question to answer, but now it’s getting a lot harder. And that means a lot of extra presentation-layer work for someone on your team.

When I first started building static websites, back in the Neolithic era, the typical desktop or notebook had a screen that was 1024×768 or 1280×1024 pixels. There were some outliers, mainly at 800×600. Based on that, page design was set to be somewhere around 700 pixels wide. That let everyone see everything, without worrying about horizontal scrolling.

Since then, three things have happened to change those assumptions:

1. Wide-screen displays have become more common, which means that monitors are shorter and wider. Designs have to take both dimensions into account. My 15” MacBook Pro and 13” MacBook Air, for example, are both 1440×900. This can be a problem when showing images that were formatted for a 1024-pixel-high display.

2. Huge monitors are becoming common. Modern desktop monitors (or external displays for laptops) seem to start at 1680×1050, and go all the way up to 2560×1600. A 700-pixel-wide web page is lost in all that real estate, so what behavior does your application exhibit if the browser window is widened?

3. Mobile devices are all over the map. With handsets, an Apple iPhone 3GS screen is 480×320, the iPhone 4 is 960×640, Motorola’s Droid2 is 854×480, and HTC’s HD7 is 800×480. For tablets, the iPad is 1024×768, the Samsung Galaxy Tab is 1024×600, and the Motorola Xoom is 1280×800. Most mobile browsers dynamically scale down wider pages or graphics to fit, but still, it’s good to know what you’re shooting for.
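
To make the mobile numbers concrete, here’s a rough Python sketch that asks how much a browser would have to shrink the classic 700-pixel-wide page to fit each device’s portrait width. It’s a simplification under stated assumptions: it treats the screen sizes quoted above as CSS pixels and ignores device-pixel ratios and the default viewport widths that real mobile browsers use, so read the output as relative, not exact. The function names are hypothetical.

```python
# Screen sizes quoted above, in pixels (long side, short side).
DEVICES = {
    "iPhone 3GS": (480, 320),
    "iPhone 4": (960, 640),
    "Motorola Droid2": (854, 480),
    "HTC HD7": (800, 480),
    "iPad": (1024, 768),
    "Samsung Galaxy Tab": (1024, 600),
    "Motorola Xoom": (1280, 800),
}


def fit_scale(content_width: int, viewport_width: int) -> float:
    """Scale factor needed to show content without horizontal scrolling.

    Never enlarges: content that already fits stays at 1.0.
    """
    return min(1.0, viewport_width / content_width)


def portrait_scales(page_width: int = 700) -> dict[str, float]:
    """Scale factor per device when held in portrait (short side = width)."""
    return {
        name: round(fit_scale(page_width, min(w, h)), 3)
        for name, (w, h) in DEVICES.items()
    }


for device, scale in portrait_scales().items():
    print(f"{device:20} {scale:.3f}")
```

By this crude measure, the iPhone 3GS would show the page at under half size, while the iPad and Xoom need no scaling at all in portrait.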

Here’s a snapshot of data taken this month from one particular source – the sdtimes.com website. It’s a “focus group of one,” and may not reflect what you get on your own website or Web applications, but it’s a data point to think about.

Craig Reino, SD Times’ Director of IT, told me that, “Resolutions are undergoing a revolution. Where there used to be a clear majority among our browsers on sdtimes.com there is now a wide range of resolutions. The most popular has only 14% and it is 1280×1024.”

Specifically, here are the top 10 screen resolutions, pulled from our web analytics:

1280×1024: 14.02%

1280×800: 12.87%

1440×900: 10.62%

1680×1050: 10.17%

1024×768: 8.26%

1920×1200: 7.19%

1920×1080: 7.00%

1366×768: 6.86%

1600×900: 2.33%

320×480: 2.03%
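
One way to slice these numbers: the short Python sketch below sums the shares in the list to see how much traffic the top 10 covers, how much comes from widescreen displays, and how much comes from screens shorter than 1024 pixels. The 1.5:1 widescreen cutoff is an arbitrary assumption, and the data covers only the top 10, so treat the results as rough.

```python
# Top 10 screen resolutions on sdtimes.com, from the list above:
# (width, height) -> percent of visits.
RESOLUTIONS = {
    (1280, 1024): 14.02,
    (1280, 800): 12.87,
    (1440, 900): 10.62,
    (1680, 1050): 10.17,
    (1024, 768): 8.26,
    (1920, 1200): 7.19,
    (1920, 1080): 7.00,
    (1366, 768): 6.86,
    (1600, 900): 2.33,
    (320, 480): 2.03,
}


def share(predicate) -> float:
    """Total percent of visits whose (width, height) satisfies predicate."""
    return round(sum(pct for (w, h), pct in RESOLUTIONS.items() if predicate(w, h)), 2)


top10 = share(lambda w, h: True)
widescreen = share(lambda w, h: w / h >= 1.5)  # assumed cutoff for "widescreen"
under_1024_high = share(lambda w, h: h < 1024)

print(f"Top 10 cover {top10}% of visits")               # 81.35%
print(f"Widescreen: {widescreen}%")                     # 57.04%
print(f"Shorter than 1024 pixels: {under_1024_high}%")  # 42.97%
```

So roughly 43% of all visits come from displays shorter than 1,024 pixels, the same case that trips up images formatted for a 1024-pixel-high display.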

Does this match your experience?

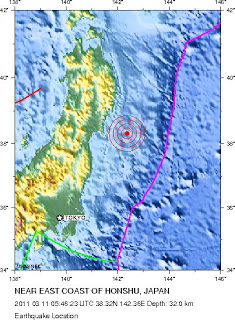

The earthquake and tsunami in Japan were horrific in scope. The subsequent challenges with the Japanese nuclear power plants have magnified the disaster. Where will it end? How many lives were lost, how many families shattered? As I write this, the world has many questions, but sadly, not many answers.

Like many of you, I thought first of my friends and family members who live in Japan. I was gratified to learn quickly, in large part thanks to social networking, that all were safe. Rather than jamming up phone lines, for example, I could read a cousin’s Facebook posts and see that he, his wife and his young daughter were out of harm’s way.

Natural disasters can strike anywhere. Earthquakes, of course, are an ever-present danger in Northern California, where I live. Floods, fires, hurricanes, airplane crashes: those are only some of the hazards. In some countries, of course, there’s also the threat of war, of oppression, of rebellion; we’re reading about that in the news headlines. It’s a dangerous world we live in. For too many of us, surrounded by all our luxuries and technology, it’s easy to forget that.

There’s a lot that we could talk about today. About how social media is making the world smaller. How cloud-based apps and backups can help a business (or a family) recover quickly in the event of a property loss. About how distributed software development lets us experience more of the world’s diversity than traditional on-premise organizational models.

But instead I’ll say: Be safe.

Archeologists criticize Smithsonian over Java Objects.

That headline in the New York Times’s Arts Beat section gave me pause. What type of Java objects are the historians upset about? Are Lara Croft or Indiana Jones going after some valuable POJOs or something?

The story made it clear that these Java objects aren’t instances of a class that contain their own state and inherited behaviors, but rather valuable artifacts salvaged from a shipwreck near Indonesia. Oops. My bad.

My excuse: As I write this, Java is pretty high in my mental stack, thanks to our just-completed AnDevCon: The Android Developer Conference. The conference was a tremendous success, far exceeding our expectations for a debut event. (Mark your calendar for AnDevCon II, November 7-9, 2011, in the S.F. Bay Area.)

The bad news is that some of the classes were too crowded, and that the conference hotel’s wireless infrastructure buckled and collapsed under the strain of myriad laptops and mobile devices. Even the local 3G carrier networks crumbled under the load.

The good news is that attendees learned a lot about Android development, many receiving their first real hands-on experience with Honeycomb – that’s Android 3.0, running on Motorola’s Xoom tablet. (My attempts to integrate a prototype Motorola tablet with a Canon EF 24-70 f/2.8L to create a Xoom lens were, alas, unsuccessful.)

But seriously: the post-Oracle-acquisition concerns that “Java is dead!” are washed away when you consider Android’s rapid trajectory. Oracle’s lawsuit notwithstanding, Java is in tremendous demand as the native language for Android apps. Sure, the installed base of iPhone handsets is huge, and when it comes to tablets, Apple’s iPad has a huge head start. Not only that, but the faster and thinner iPad 2 is sure to sell well, even though it’s merely an incremental upgrade. But the wind is currently at Android’s back.

As someone who keeps an iPhone 4 in one pocket and an HTC Evo in the other, I can see clear benefits to both platforms. It’s to our benefit as developers and consumers to have the Mobile Wars continue. Competition and innovation are good for us, and good for the evolving markets.

Perhaps someday we’ll see Android phones being dug up by archeologists… and stored, together with the iPhone, in the Smithsonian. Wouldn’t that be something?

Earlier this week, Apple announced the iPad 1.1, the long-awaited successor to its magical tablet.

The iPad 1.1 is very similar to the original iPad (which I’ll call the “iPad 1”), except that it’s available in white. It’s a bit thinner. It’s a bit lighter. It has a dual-core processor (Apple says 2x faster), a better graphics chip (Apple says 9x faster) and more memory.

Oh, it has a couple of cameras, a rear-facing one for shooting photos and videos, and a front-facing camera for doing video chats. The iPad 1.1 comes in two versions, one for GSM wireless networks, the other for CDMA networks.

Apple calls this device the iPad 2. However, it’s a minor incremental upgrade, and should really have been called iPad 1.1 or maybe iPad 1.5. Beyond the cameras, there’s nothing especially new here. Same screen. Same range of installed storage, from 16GB to 64GB. Very similar pricing, starting at US$499. Minor tweaks to the operating system to add support for WiFi hotspots, media sharing, and a few other goodies.

Don’t mind my skepticism: I was one of those who preordered the iPad 1 last year, and truly love my tablet. I use my iPad every day. Of course, I also use my iPhone and HTC Evo Android phone every day, so perhaps I’m easily swayed by cool gadgets. Even so, this was one announcement from Cupertino that didn’t impress me much; my credit card stayed firmly planted in my wallet.

My guess (and it’s only a guess) is that Apple released this very incremental upgrade as a competitive reaction. It had to respond to the sudden proliferation of Android tablets like the Viewsonic gTablet or Archos 7 or Motorola Xoom. Releasing the iPad 1.1 now gives Apple several benefits:

• It keeps the iPad in the news. Apple is a brilliant marketing company that knows how to stroke the media and sway public opinion.

• It addresses the single biggest area where Android devices had a clear advantage: cameras.

• It let Apple integrate a Verizon-compatible CDMA radio system without reworking the old hardware.

• The dual-core A5 chip and added RAM solve performance issues that prevented some bigger applications from running on the iPad, like iMovie.

• It maintains sales momentum and media attention until the real next-generation device (iPad 3) comes out, perhaps as early as this fall.

That said, let’s turn our thoughts to Android tablets, which will be helped significantly by the newly released Android 3 “Honeycomb.” Personally, I’m thinking about buying the Motorola Xoom — once the WiFi-only version comes out. (I do not need another wireless bill, thank you very much; that’s why I have a Novatel MiFi.)

Yes, gadgets are good, and having lots of tablets drives consumer choice, fuels competitive innovation and lowers pricing. But from the developer perspective, the unfettered explosion in form factors is a worry. What is that going to mean for hardware/software compatibility, third-party apps and building a huge ecosystem?

Apple’s tight control over its own hardware makes it easy to know that a tablet-oriented iOS application will run on every iPad device, with only rare backward-compatibility limitations. Similarly, Microsoft’s WinHEC and tight reins over its Windows logo program mean that you can be pretty much assured that any modern Windows application is going to run on any modern Windows device.

With the open-source Android platform, though, hardware makers are free to “innovate” without limitation – and that means more than just different screen sizes. You can have different processors, different amounts of RAM, different input technologies, different ports, different cameras and other peripherals, different power-management systems, different collections of libraries, different device drivers, different builds of Android itself… and nobody policing the hardware abstraction layers.

Already I have an issue with the Line2 telephone application. It’s “certified” for three Android models, and my HTC Evo isn’t one of them. Sometimes Line2 just doesn’t work well. The developer, Toktumi, says “Voice quality is a known issue on HTC EVO 4G (Sprint) phones.” Have some other Android device? The company says, “If you don’t see your device listed here you can still download Line2 and try it for free however we can’t guarantee performance quality on non-certified phones.”

That frustration may be the future of Android.

Many iPad customers are perfectly contented with the beautifully designed, carefully integrated hardware packages that Apple presents. Android represents the diametric opposite. Android is getting a lot of attention because it offers unfettered creativity and competitive differentiation. Let’s see what happens if that differentiation leads to fragmentation. If that happens, Apple’s carefully curated walled garden may begin to look more attractive.

It’s great to get out of the office and go to conferences, learn new stuff and blow the cobwebs away. And it’s even better to do it with a friend.

About two or three times a year, I get the opportunity to attend a professional conference or convention. I’m not talking about those events that my company produces (like AnDevCon, coming up Mar. 7-9), or those which I cover as a journalist. Rather, I mean conferences where I’m an attendee: taking the classes and workshops, schmoozing with the faculty, grabbing a drink with friends, relaxing at an after-hours party, going to bed too late at night, and getting up too early the next morning.

If the conference or convention doesn’t suck, I come back refreshed. Plus, I come back with lots of notes from sessions and conversation that result in thoughtful analysis, meetings and action items. Because of my role as the “Z” of BZ Media, often what I learn at professional conferences (both formally and informally) impacts the direction of our company, both at the tactical and strategic level.

Want an example? My work at BZ Media straddles the twin worlds of software development (we publish SD Times, and most of our readers are enterprise software developers) and media. After all, we’re a magazine/website publisher and event producer, and the fundamentals of publishing and conference management are what we live with every day.

So, while I learn about trends in the subject matter we cover at events like Microsoft TechEd, Apple WWDC or Google I/O, I learn about trends in the publishing industry at events like the excellent Niche Magazine Conference, which was held Feb. 14-15 in Austin, Tex. (In fact, I taught a class there, and also moderated a roundtable on mobile devices like phones and tablets.)

What’s cool at the Niche Magazine Conference (and others like it) is comparing and contrasting what we do at SD Times with, say, a monthly magazine for home aquarium enthusiasts. Or a quarterly journal about expensive pens and hand-crafted writing paper. Or the regional business magazine for Bozeman, Montana. Or a healthcare professional’s magazine.

We have much in common, SD Times and Cheese Market News, the weekly newspaper of the nation’s cheese and dairy/deli business. Not the content, of course, or the specific advertisers that support the magazine – but just about everything else.

This year, though, I attended the Niche Magazine Conference by myself. That’s fine – I met lots of people, and took lots of notes. But the last time I attended, it was with a colleague. The result was exponentially more learning and better action items.

When you go to a conference with someone, sometimes you both attend the same parts, like a keynote or a general session. Magic happens when you get together at a quiet minute to compare notes. You heard that? That’s not how I interpreted that data. How can we use this? What do we do next? Got it!

Of course, you mustn’t always stick together. Whenever possible, divide and conquer. Breakouts mean breaking up, so you can cover twice the subject matter – and then get together later to compare notes. It’s astonishing how much more knowledge appears when you explain the high points of a class to a colleague, or even a room full of colleagues.

At social events, like parties or meals, resist the temptation to hang out together. That’s a value-subtractor, not a value-adder. You’ve got to split up and talk to new people. That’s the whole point.

Pair conferencing means going to a conference or convention with a colleague and sharing experiences. It’s much more than twice as good as going by yourself. If you’re getting out of the office, go with a friend.

I never spent any time with the towering figure behind Digital Equipment Corp., but I remember seeing Ken Olsen speak on several occasions. Sadly, the details of those talks are vague. But certainly the story of Kenneth H. Olsen, who died on Sunday, Feb. 6, at age 84, is the story of the pre-PC computer industry.

Olsen founded Digital Equipment Corp. in 1957 with $70,000 in seed money. From there, he turned it into one of the powerhouses of the computing industry. He took on International Business Machines on many fronts, and pummeled other minicomputer makers like Data General, Prime, Wang and others that circled around mighty IBM in the 1970s.

DEC’s computers were brilliant. While most of my own early computing career was centered on IBM 370-series mainframes, I also had access to smaller systems, such as DEC’s 16-bit PDP-11 and 32-bit VAX systems, for projects that focused more on scientific and mathematical computation instead of data processing. But even on the mainframe, I was as apt to access the system with a DECwriter II printing terminal as an IBM 3278 display terminal. I loved those old DECwriters.

And of course, anyone who worked in computing during that era has read Tracy Kidder’s “The Soul of a New Machine,” which chronicled attempts by Data General engineers to build a computer to compete against DEC’s mighty VAX. If you haven’t read it, you should, to get a flavor of that wonderful era of glass-house innovation.

Ken Olsen left DEC in 1992 – nearly 20 years ago – amid the company’s faltering fortunes. Despite its advances with RISC chips like the Alpha, DEC was already turning into a living fossil. The company’s demise came only a few years later, when Compaq purchased DEC in 1998. The minicomputer era was officially over.

We owe Kenneth H. Olsen a tremendous debt for creating Digital Equipment Corp., one of the greatest innovators in the high-tech industry.

It’s a bird! It’s a plane! It’s Super-Geek!

Like many techies, I have pretty high self-esteem. There’s no run-of-the-mill tech problem I can’t solve. Networking? Operating systems? Application crashes? Crazy error messages? Bring ‘em on, says Alan “MacGyver” Zeichick, a veritable St. George ready to slay every virtual I.T. dragon.

There’s one category of problems that MacGyver can’t solve: Epic failures caused by my own epic stupidity. The most recent example wasted time, wasted money – and demonstrated that software problems aren’t necessarily software problems. Let my sad story serve as a cautionary tale for other Super-Geeks.

Until recently, my everyday computer was an Apple 15” MacBook Pro (Mid 2007). However, starting last summer, it began driving me crazy with frequent pauses (like it would ignore all keyboard and mouse input for a few seconds), occasional hard crashes, and lots of spinning beachballs that seized up the whole computer for several minutes at a time.

System diagnostics didn’t show anything. Swapping out the memory didn’t help. After one particularly nasty day of constant beachballs last fall, I purchased a new hard drive, installed a clean copy of the operating system and restored my backed-up data. Nope. Didn’t help either.

With the constant pauses and beachballs affecting both my productivity and my sanity, the only conclusion was that the computer was doomed. Yes, I was forced to buy a new 13” MacBook Air. That was back in November, after the sexy new models came out. (As you might guess, I wasn’t too upset by this.)

Meanwhile, since the MacBook Pro still kinda sorta worked, I relegated it to a side table next to my desk. It found new life as a second machine – even with its little pauses, beachballs and occasional crashes, it’s a fast computer with a big display, ideal for running Parallels and doing other assorted tasks.

Last week, however, it was driving me crazy again. One day after school, I shared my frustration with my teenage son. As he watched it pause and beachball, he asked, “Maybe it’s the power supply?”

“Don’t be silly,” I replied. “That’s the power supply I’ve always used with it… oh, hmm, okay, let’s check.”

A quick moment’s study showed that the little white brick that I’ve been using with the MacBook Pro is a 60-watt power supply. That’s the wrong one. The correct power supply, according to Apple’s specs for this model, is a nearly identical 85-watt little white brick – which lived in my briefcase for road trips.

Come to think of it, the MacBook Pro never paused, beachballed or crashed when I was traveling or running on batteries. It only misbehaved in my office. With the too-small power supply. Yup. There we go.

We swapped the power supplies, and you know, the MacBook Pro runs like a champ. It’s like a brand-new computer. Not a pause, not a beachball, not a crash.

Don’t I feel stupid. I’m mildly annoyed at Apple, which made its incompatible power supplies look identical and use the same plug. You’d think the machine would notice that it was being fed by 16.5 volts, 3.65 amps instead of 18.5 volts, 4.6 amps, and would pop up an error message saying, “Wrong power supply, you dummy.” It doesn’t. But let’s be honest, there’s nobody here to blame but myself.
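The gap between the two little white bricks is easy to confirm with a bit of arithmetic: power in watts is volts multiplied by amps. Here is a minimal Python sketch using only the ratings quoted above; the helper names `adapter_watts` and `is_underpowered` are hypothetical, made up for illustration, and the sketch ignores everything a real power-management controller does.

```python
def adapter_watts(volts: float, amps: float) -> float:
    """Rated output power of a DC adapter: P = V * I."""
    return volts * amps


def is_underpowered(supplied_watts: float, required_watts: float) -> bool:
    """True when the adapter's rated output is below what the machine is specified for."""
    return supplied_watts < required_watts


small_brick = adapter_watts(16.5, 3.65)   # the adapter that lived in my office
correct_brick = adapter_watts(18.5, 4.6)  # the adapter Apple specifies for this model

print(f"{small_brick:.1f} W vs. {correct_brick:.1f} W")  # 60.2 W vs. 85.1 W
print(is_underpowered(small_brick, correct_brick))         # True
```

In other words, the office adapter was roughly 25 watts short of what the MacBook Pro expected, which only mattered when the machine wasn’t running on its battery.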

The temporarily humbled Super-Geek offers two morals to this story.

First: When you’re debugging problems with your systems – even if they look like software problems – it may be the hardware. In fact, it may be hardware that isn’t computational hardware. I’ve seen systems have intermittent issues caused by dirty cooling fans, or dust on the motherboard. It’s amazing what a blast of compressed air can do for solving hardware issues. And of course, power supplies are notorious for causing intermittent problems. I truly should have thought of this myself.

Second: When you’re stymied by a tech problem, try demonstrating it to someone else. By looking at the issue with fresh eyes, they might instantly produce an answer that you’ll never find.

And yes, my son did me proud.

On January 27, Microsoft released its latest quarterly financial reports, showing that its net income was US$6.6 billion – more than 10% above analyst estimates. Total quarterly revenue was $19.95 billion, compared to analyst estimates of $19.2 billion. This represented significant growth from the same quarter a year earlier.

While I was listening to the financial news that morning on radio station WCBS in New York, I heard a Wall Street analyst describe the House that Gates Built as “stodgy old Microsoft.”

The analyst (I was driving, and didn’t catch his name) went on to say that much of the profit increase can be attributed to the Kinect, an add-on to the Xbox game system – a major shift, he said, from the company’s overwhelmingly enterprise-oriented business model.

What a change that is. A couple of decades ago, analysts – myself included – said that Microsoft’s weakness was that it didn’t know how to play in the enterprise market, which was dominated by IBM.

In fact, not long ago, Microsoft was a red-hot, innovative technology company that was going to take over the world. Stodgy meant IBM.

How times change. Microsoft isn’t seen as consumer-oriented or innovative. It’s stodgy, says the analyst. I’ve heard people talk about Google as a has-been, and everyone wants to work for Facebook. But then people are starting to say that Facebook has “peaked,” and that the world belongs to some next generation of innovators.

Okay, let’s have some fun. I’ve set up an online survey. It’s not scientific, and it’s not statistically significant. But it asks about a whole bunch of big software development companies and organizations – and whether you think they’re stodgy or not. That’s all.

If you get a minute, please take the survey. If there are enough responses, I’ll report back in a few weeks.

Proprietary multimedia formats annoy me. I don’t care if it’s DOCX or SWF or WMV or H.264 or CR2. I don’t like them, and wish they’d go away. Or at least, I wish they’d stay off the public Internet.

Software developers should not have to pay to be able to read or write data in a specific file format – or sign a license agreement.

Web developers and website owners should not have to choose file formats based on researching which browser makers have licensed which codecs.

I’m not talking about specific implementations of formats, whether they’re for documents, spreadsheets, audio files or multimedia files. I’m all in favor of both open-source and closed-source implementations of codecs and file interpreters. Rather, I’m talking about the openness of the file format itself.

As we increasingly encode vast quantities of data into digital files, we shouldn’t have to worry that the data itself is in a format owned by or controlled by a commercial entity. We should not cede control over which software can read and write data in those formats.

And we shouldn’t be forced to choose between “official” codecs vs. reverse-engineered codecs that may (or may not) be able to read the data correctly.

Take one file format close to my heart: CR2. That’s Canon’s proprietary file format for the raw images taken by its digital cameras.

Canon does not license the CR2 format to other companies. Therefore, when working with raw images, I must choose between Canon’s Digital Photo Professional picture-editing software, which can read CR2 but which I think is pretty poor, or top-shelf photo editing and workflow software like Adobe’s Lightroom and Photoshop (both of which I use) and Apple’s Aperture.

While Apple and Adobe did an excellent job reverse-engineering the CR2 file format, neither company can read and interpret the image-sensor data 100% accurately, and some image metadata is not available to their software.

This cheeses me off.

(Canon is not alone; Nikon similarly doesn’t license its raw image format, NEF.)

Not into cameras? We’re watching a bigger drama play out with Internet multimedia formats. It’s a huge drama, actually, since it affects many websites and, of course, rich media playing on mobile devices.

The development of HTML5 has been hampered because of the patent-protected nature of many of its component formats. One of its key components is the H.264 codec, developed jointly by the ITU-T Video Coding Experts Group and the ISO/IEC Moving Picture Experts Group (MPEG). H.264, also known as MPEG-4 AVC, is widely known and used by many Internet developers, but it’s patented and must be licensed – which has been the basis of lawsuits.

Recently, Google decided to drop H.264 from its Chrome browser in favor of WebM, a newer specification that it’s been developing. WebM will be the core multimedia format on Android.

As I understand it (see “FSF throws support behind WebM codec”), the word on the street is that WebM is pretty open. If that’s the case, then I support it. And I hope you do too – and wherever possible, shun any file format that’s restricted by patents.

Now, if only the fine folks at Canon (and Nikon) would understand that freeing their proprietary image file formats – and thus improving the ability of software like Lightroom, Photoshop and Aperture to handle their images – would be a competitive advantage, we’d all be better off.

Winter time, Long Island, New York. Storm after storm means office closures, lost productivity, missed ship dates.

Visiting SD Times’ New York office this week, I enjoyed a few decent snowfalls. That reminded me of why I moved from New England to San Francisco back in 1990… our New York office is a nice place, but I wouldn’t want to spend the winter here.

This morning, after about a foot of snow fell, I heard an analyst on the radio (I forget who) say that when employees work from home because they can’t get to the office, they work at approximately 80% efficiency.

Of course, that’s only for some types of workers. For the most part, that would include software developers, especially if they have access to a powerful laptop or desktop computer at home, and have either brought work home or can access their files over the Internet. (That’s where the cloud can come in handy.)

By contrast, some types of workers can’t be efficient at all when working at home. Sorry, gas station attendant, mail-room clerk, operating-room nurse, restaurant chef, train driver, firefighter, produce manager, or developer without access to a computer: You’re not 80% as valuable to your employer if you’re snowed in.

Let’s think about that 80% figure. In some ways, brain-centric workers like programmers should be more productive when working at home – unless, of course, they’re having to manage having their children home from school as well (snow day), or unless they don’t have access to their tools. Why more productive? No meetings, for a start. No loss of time for the commute. No wasted time around the proverbial water cooler.

On the other hand, there are myriad distractions at home. The television. The fridge. The temptation to go back to bed for a nap. Pets. The fridge. Deciding to run some errands after the streets are cleared. Building a snowman. The fridge. And so on.

In the long term, of course, an organization that’s geared around having developers in an office may not be as efficient during a snow day as an organization that’s heavily Internet-centric, cloud-centric, and distributed-development-centric. In the short term, though, we should be able to manage.

How efficient is your organization when your employees can’t get to the office? Are there steps you can take to prepare for that contingency? And might your developers benefit from an occasional planned “snow day,” so they can work at home, heads-down in a change of venue, without meetings and socialization? Or would the lure of the fridge be too tempting?

Larry Page is taking over the command chair of the Starship Google. He replaces Eric Schmidt as CEO; Schmidt, who was brought in a decade ago to provide adult supervision, moves into an advisory position as executive chairman.

Before he joined Google, Schmidt was already a legend in the computer industry. He was praised for his work at Xerox PARC, Sun and Novell, renowned for both his engineering skills and business acumen.

Hiring Schmidt as CEO back in 2001 was the smartest move that the brilliant, but immature, Google co-founders Page and Sergey Brin could have made. Page and Brin constantly pushed the envelope of technology – they didn’t know what couldn’t be done, and so they did it anyway. Schmidt made it all work by handling all those pesky details that entrepreneurs don’t want to deal with.

You can read Schmidt’s blog post about this change, where he says,

But as Google has grown, managing the business has become more complicated. So Larry, Sergey and I have been talking for a long time about how best to simplify our management structure and speed up decision making—and over the holidays we decided now was the right moment to make some changes to the way we are structured.

Now, I don’t know the real story, but of course, it’s never as simple as that. Did Schmidt want to step back and focus on other interests? Was Page feeling that after a decade of the older engineer’s tutelage, he was now ready for his moment in the spotlight? We’ll probably never know.

I’m concerned about what this change means for Google. When you move beyond its core business of search, AdSense and AdWords, the company feels incredibly scattered, running in all directions at once.

Yes, Google has had incredible success with Android, but it’s unclear how it helps the bottom line. Ditto for the fast-rising Chrome browser and Chrome OS. What about book archives? Knol? Free office suites and email hosting? YouTube? Self-driving cars? Wave and Buzz? Print ads? Street View mapping? Web services and cloud computing? Social networking? Blog engines? Orkut? Internet phone calls? Photo sharing? Health records? What are they doing at the Googleplex?

In short, although Google makes tons of money and gobs of profit, the company lacks focus. Now, here are the questions:

1. Does the lack of focus come from Schmidt? Doubtful.

2. Does the lack of focus come from Page and Brin? Probably.

3. Was Schmidt able to rein in Page and Brin? Maybe at first, but it seems unlikely.

4. Was Schmidt feeling frustrated? Well, if my premise is correct, who wouldn’t be?

My guess is Schmidt threw up his hands. I wonder how Google will run without his adult supervision… but if my theory is correct, it’s been pretty much that way already for the past few years.

Look at all the wonderful new toys announced last week at the annual Consumer Electronics Show in Las Vegas. Tablet computers and lots of things running Android. Internet-connected televisions that have browsers and apps, such as Samsung’s Smart TV. A new app-store model for OS X, that is, Apple’s Macintosh notebook and desktop computers. Lots and lots of new things.

For consumers, this represents not only innovation, but also really tough choices. Now, when you choose, let’s say, a television, you can’t just look at size, picture quality, sound quality and price. If you want the latest in technology, you also have to see how well the television does on the Internet (i.e., its internal software), and decide whether you believe that the company’s app-store model will attract developers.

It’s worse if you’re an enterprise development manager, or an executive charged with choosing and funding app-dev projects. (ISVs can simply pick the popular favorites.) If you work for a media company, let’s say, or a retail chain, or a bank, or a government agency, you’re looking at massive fragmentation. Which platforms to build for right now? Which to ignore? Which to handle with a wait-and-see attitude? Fragmentation is painful.

Not only that, but there are real questions about how to develop for all these platforms. Native tools often provide the best results but require a large investment in training and technology, and it’s difficult to ensure feature parity. It’s also hard to balance the need to have native-looking user experiences with having a consistent approach across all platforms.

The other big choices are multi-platform frameworks. They can simplify development, but often with a least-common-denominator approach. You’re also at the mercy of those frameworks’ developers in terms of which platforms they support and how robustly they support each platform. We are experiencing fragmentation there too: I’ve lost track of how many frameworks there are, both open-source and proprietary, vying for developers’ attention.

Once you’ve written your app, now you have to figure out how to distribute it. Forget shipping CD-ROMs. Now you need to hook up with and manage your software’s presence in any number of different app stores, some by hardware makers, others by carriers, some by third parties. Each app store has its own rules, technical requirements and payment model.

Ouch.

This new world of platform fragmentation is a far cry from the not-too-distant past, when we had far fewer choices—or in some cases, no choices. Building desktop software for business users? Win32. Designing a website? Make sure it runs on Internet Explorer, and maybe Firefox. Writing a rich Internet application? Flash. Creating a server application? Choose Java, Win32, or native code for Linux or Unix.

I feel bad for consumers. I really do. There are too many choices, and it’s unclear which will be viable and which will crash and burn. The explosion of app platforms is reminiscent of the VHS vs. Betamax wars of the 1970s, or the more recent Blu-ray vs. HD DVD battles of the late 2000s—only with even more players. You can’t always tell who’s going to be the winner, and first-mover advantage doesn’t guarantee victory: Betamax came out first, for example, but was trounced by VHS.

But I feel worse for enterprises that need to have a strong presence across the non-desktop screen. Each one of these platforms requires education—and then hard choices, as well as investments that may not pay off. Yes, innovation is good and options are wonderful. But it will be better still when things settle back down again.

What a year 2010 was – and what a year we can look forward to. Can you believe that the iPad only came out last year? That Oracle hasn’t always owned Sun and Java? That Microsoft used to be a powerhouse in consumer technologies? That Google used to be a search engine, and Amazon was an online bookstore?

As we’ve done since 2001, the editors of SD Times had a good time assembling a collection of year-in-review stories. You can find them here:

Year in Review: Overview

Year in Review: Agile

Year in Review: ALM

Year in Review: Java

Year in Review: Mergers and Acquisitions

Year in Review: Microsoft

Year in Review: Mobile

Year in Review: Open Source

But let’s look back even farther. In our Jan. 1, 2001, issue of SD Times, I wrote the introduction to our first “Year in Review” retrospective. Let’s see what I wrote about 2000, under the headline, “XML, Dot-Coms, Microsoft Dominate Headlines.” (Bear in mind that the introductory sentence was written ten months before the events of Sept. 11, 2001. It was a reference to unfounded Y2K hyperbole. But rereading it today: Yikes!)

The year 2000 came in with a whimper—no crashing airplanes, no massive failures of the power grid—and ended with a peculiar type of bang, as the U.S. election woes turned a few hundred Floridians and a few thousand lawyers into the de facto electors for the next Leader of the Free World.

Outside of the Tallahassee, Fla., and Washington, D.C., courtrooms, life was tamer, but only slightly. For software developers, the world was turned upside down by these top stories of the year:

XML Rules. The Extensible Markup Language came into its own in 2000. Forget about HTML and Web browsers: The name of the game is business-to-business commerce and platform-independent information exchange using XML schemas. Three years ago, everything had to be Web-enabled; now, it must be able to speak XML.

Bye, Bye, Billions. The second-quarter dot-com crash, coming after the Nasdaq stock exchange passed 5,000 in March, did more than turn a few billionaires into millionaires, and drive a few poorly conceived Web sites out of business. It also made it harder for new ideas to get funding.

The plummeting of share prices and the delay of IPOs put stock options under water, making it hard for many companies to attract technical talent. Profits, rather than technology, re-emerged as the best metric for valuing investments. The mood by the end of 2000 was gloomy on Wall Street and in Silicon Valley.

Whither Microsoft? Well, it’s back to lawyers. The antitrust trial ended with judgments against Microsoft Corp., and with a decision to break up the world’s largest software company. But as appeals began their lengthy process, there was no certainty as to what changes would occur in Microsoft’s business practices.

Many would agree that Microsoft’s influence appeared to have diminished by the end of the year, but the company still wields incredible market and technological influence — and has massive cash reserves. Although wounded and distracted, Microsoft is still king of the hill.

Nothing But .NET. As if to underscore that point, Microsoft unveiled its .NET initiative, designed to support true distributed computing over the Internet, enabled by its COM+ distributed component model and middleware, its XML-based BizTalk server and a new programming language, C#.

The first parts of the .NET system were released in the fourth quarter, along with betas of a few development tools. The importance of .NET, and whether it will allow Microsoft to dominate the business-to-business Internet, remains to be seen.

Wireless: The Next XML. Software tools vendors and enterprises alike went gaga over the Wireless Application Protocol, seeing the mobile-commerce market as the next great opportunity, and many application-server vendors repositioned their companies to bet the business on WAP.

Early reports from customers about poor quality of service, however, led some analysts to wonder if this huge untamed beast is actually a white elephant.

On the other hand, Bluetooth, a short-range wireless specification for embedded devices, achieved widespread industry support.

UML Is the Best Model. This past year saw a proliferation of new Unified Modeling Language-based design, analysis and coding tools. A drive to formal modeling has been a long time coming, but given the complexity of modern software engineering, the increased cost of hiring developers and the new business imperatives for delivering software on time, there’s no doubt that modeling is here to stay.

I’m having an identity crisis. Please feel free to join me.

When I wake up my laptop, it asks for a username and password. The right answer provides access not only to the machine and its locally stored applications and data, but also my keychain of stored website passwords. The same is true with my smartphone: I provide a short numeric password and have access to my stored data and configured applications – which include cached passwords for email and various online services.

So, you might say, on a fundamental level my digital identity is absolutely tied to a few specific edge devices that I possess, carry around with me, and try hard not to lose.

Yet on another level, my identity lives in the cloud. Whether it’s Facebook, Twitter, LinkedIn, Google Documents, Windows Live, Netflix, Amazon.com, Salesforce.com, Dropbox, the SD Times editorial wiki, this blog or elsewhere, my identity is in cyberspace. It’s accessible from any machine’s applications, via any browser and even APIs.

At the same time, my digital identity has absolutely nothing to do with whichever edge devices I’m using today.

This thought occurred to me when meeting with PowerCloud, a Xerox PARC spinoff that’s building a cloud-based authentication system for small business networks. In effect, infrastructure devices like routers and switches are registered with the PowerCloud system, and are programmed to only allow authorized edge devices (laptops, desktops, smartphones, network printers) to connect to the LAN. It’s a clever system that not only improves network security but also simplifies network configuration.

The whole PowerCloud system is based on authenticating specific devices based on either their MAC address (for the edge device) or a firmware token (for the infrastructure device). The system doesn’t care who is using the hardware; if it’s not authorized it can’t connect. That’s very different, of course, from how most of us view the Web, where it’s all about username and password. But it’s the way that the invisible world works. For example, your phone is authenticated to the mobile network based on an electronic serial number baked into the phone or a removable SIM card, not on your phone number or your unlock password.
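PowerCloud’s own implementation isn’t described here, but the general idea of admitting devices rather than people can be sketched in a few lines of Python. Treat this as a hypothetical illustration: the `DeviceRegistry` class, its methods and the sample MAC addresses are invented, and a real network would not rely on a MAC address alone, since MAC addresses can be spoofed.

```python
import re

# Twelve hex digits once separators are stripped.
_MAC_DIGITS = re.compile(r"[0-9a-f]{12}")


def normalize_mac(mac: str) -> str:
    """Canonicalize a MAC address to lowercase, colon-separated form."""
    digits = re.sub(r"[:\-.]", "", mac.strip().lower())
    if not _MAC_DIGITS.fullmatch(digits):
        raise ValueError(f"not a MAC address: {mac!r}")
    return ":".join(digits[i:i + 2] for i in range(0, 12, 2))


class DeviceRegistry:
    """Hypothetical allowlist: admits registered hardware, ignores who is using it."""

    def __init__(self) -> None:
        self._allowed: set[str] = set()

    def register(self, mac: str) -> None:
        self._allowed.add(normalize_mac(mac))

    def may_connect(self, mac: str) -> bool:
        try:
            return normalize_mac(mac) in self._allowed
        except ValueError:
            return False  # malformed addresses are simply refused


registry = DeviceRegistry()
registry.register("00:1B:63:84:45:E6")             # e.g., an office laptop
print(registry.may_connect("00-1b-63-84-45-e6"))  # True: same device, different notation
print(registry.may_connect("00:1b:63:84:45:e7"))  # False: never registered
```

Note that nothing in `may_connect` asks for a username or password; the hardware identifier is the whole credential, which is exactly the contrast with the Web drawn above.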

The best security schemes involve something that you have (a device, a fingerprint or other physical token) and something that you know (a password or passphrase). But what does that mean for identity? Am I user “alan” on my laptop? Or am I user 132588 on LinkedIn?
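To make “something you have plus something you know” concrete, here is a minimal Python sketch that admits a login only when a registered device and a correct password are both presented. It’s an illustration under stated assumptions, not how any particular service works: the `Account` record, the device identifiers and the iteration count are hypothetical. The password check uses the standard library’s `hashlib.pbkdf2_hmac` and the constant-time comparison `hmac.compare_digest`.

```python
import hashlib
import hmac
import os
from dataclasses import dataclass, field

ITERATIONS = 100_000  # assumed work factor for this sketch


def hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)


@dataclass
class Account:
    """Hypothetical user record: one password plus a set of trusted devices."""
    salt: bytes
    password_hash: bytes
    devices: set[str] = field(default_factory=set)


def make_account(password: str, devices: set[str]) -> Account:
    salt = os.urandom(16)
    return Account(salt, hash_password(password, salt), set(devices))


def authenticate(account: Account, device_id: str, password: str) -> bool:
    """Require both factors: something you have AND something you know."""
    has_device = device_id in account.devices
    knows_secret = hmac.compare_digest(
        hash_password(password, account.salt), account.password_hash
    )
    return has_device and knows_secret


alan = make_account("correct horse battery staple", {"laptop-01"})
print(authenticate(alan, "laptop-01", "correct horse battery staple"))    # True
print(authenticate(alan, "stranger-99", "correct horse battery staple"))  # False
print(authenticate(alan, "laptop-01", "wrong guess"))                     # False
```

Even here, the identity question remains open: the account is keyed to whatever name the service chose, and the device is keyed to an opaque identifier.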

Who am I? And does it even matter?

Some software developers manage without 1,3,7-trimethyl-1H-purine-2,6(3H,7H)-dione. I have no idea how they do it. Haven’t they read the requirements document, which clearly states that all IT professionals must consistently consume massive quantities of caffeine at all times?

How can you be agile without coffee? My apologies, but tea, hot chocolate, Diet Coke and Mr Pibb simply don’t cut it. And don’t get me started about Dr Pepper. There’s got to be something in the Carnegie-Mellon CMMI about caffeine.

As part of the lead-up to last September’s iPhone/iPad DevCon in San Diego, we undertook a survey into our attendees’ favorite coffee spots. This is clearly a North American-centric survey, and we make no claims as to its statistical validity. However, we learned that (shudder) most of our attendees prefer Starbucks.

Starbucks: 53.2%

Dunkin’ Donuts: 10.5%

Peet’s: 9.7%

Caribou Coffee: 5.6%

Tim Horton’s: 3.2%

Coffee Bean & Tea Leaf: 1.6%

Seattle’s Best: 0.8%
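Adding up the reported shares shows that the seven chains listed account for 84.6% of responses; the results above don’t say what the remaining 15.4% chose. A quick Python check, using only the percentages reported:

```python
# Shares reported by the iPhone/iPad DevCon attendee survey (percent of responses).
shares = {
    "Starbucks": 53.2,
    "Dunkin' Donuts": 10.5,
    "Peet's": 9.7,
    "Caribou Coffee": 5.6,
    "Tim Horton's": 3.2,
    "Coffee Bean & Tea Leaf": 1.6,
    "Seattle's Best": 0.8,
}

listed = round(sum(shares.values()), 1)      # total for the named chains
unaccounted = round(100 - listed, 1)         # responses not attributed above
margin = round(shares["Dunkin' Donuts"] - shares["Peet's"], 1)

print(listed)       # 84.6
print(unaccounted)  # 15.4
print(margin)       # 0.8
```

The 0.8-point margin is why Dunkin’ Donuts only “narrowly” edged out Peet’s.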

The good news is that Dunkin’ Donuts came in second, albeit a not-very-close second, narrowly edging out Peet’s, a small chain that originated in Berkeley, Calif.

I’ve never visited Caribou Coffee, which operates in the eastern and Midwestern areas of the United States, but it fared reasonably well, followed by the Canadian donut chain Tim Horton’s, and two other chains, Coffee Bean and Seattle’s Best.

Surprisingly, five chains we had on our survey received zero responses: Tully’s, Coffee Republic, Port City Java, Coffee Beanery and McDonald’s McCafé. Yes, the Golden Arches is reinventing itself as a coffee shop, complete with free WiFi. No, mobile developers don’t care.

No, we did not ask how many attendees don’t consume coffee at all.