As I write this on Friday, Apr. 19, it’s been a rough week. A tragic week. Boston is on lockdown, as the hunt for the suspected Boston Marathon bombers continues. Explosion at a fertilizer plant in Texas. Killings in Syria. Suicide bombings in Iraq. And much more besides.

The Boston incident struck me hard. Not only because I’m a native New Englander who loves that city, and not only because I have so many friends and family there, but also because I was near Copley Square only a week earlier. My heart goes out to all of the past week’s victims, in Boston and worldwide.

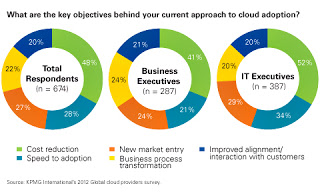

Changing the subject entirely: I’d like to share some data compiled by Black Duck Software and North Bridge Venture Partners. This is their seventh annual report about open source software (OSS) adoption. The notes are analysis from Black Duck and North Bridge.

How important will the following trends be for open source over the next 2-3 years?

#1 Innovation (88.6%)

#2 Knowledge and Culture in Academia (86.4%)

#3 Adoption of OSS into non-technical segments (86.3%)

#4 OSS Development methods adopted inside businesses (79.3%)

#5 Increased awareness of OSS by consumers (71.9%)

#6 Growth of industry specific communities (63.3%)

Note: Over 86% of respondents ranked Innovation and Knowledge and Culture of OSS in Academia as important/very important.

How important are the following factors to the adoption and use of open source? Ranked in response order:

#1 – Better Quality

#2 – Freedom from vendor lock-in

#3 – Flexibility, access to libraries of software, extensions, add-ons

#4 – Elasticity, ability to scale at little cost or penalty

#5 – Superior security

#6 – Pace of innovation

#7 – Lower costs

#8 – Access to source code

Note: Quality jumped to #1 this year, from third place in 2012.

How important are the following factors when choosing between using open source and proprietary alternatives? Ranked in response order:

#1 – Competitive features/technical capabilities

#2 – Security concerns

#3 – Cost of ownership

#4 – Internal technical skills

#5 – Familiarity with OSS Solutions

#6 – Deployment complexity

#7 – Legal concerns about licensing

Note: A surprising result was that “Formal Commercial Vendor Support” ranked as the least important factor: 12% of respondents ranked it as unimportant. Support has traditionally been held as an important requirement by large IT organizations, but with awareness of OSS rising, the requirement is rapidly diminishing.

When hiring new software developers, how important are the following aspects of open source experience? Ranked in response order:

2012

#1 – Variety of projects

#2 – Code contributions

#3 – Experience with major projects

#4 – Experience as a committer

#5 – Community management experience

2013

#1 – Experience with relevant/specific projects

#2 – Code contributions

#3 – Experience with a variety of projects

#4 – Experience as a committer

#5 – Community management experience

Note: The 2013 results signal a shift to “deep vs. broad experience” where respondents are most interested in specific OSS project experience vs. a variety of projects, which was #1 in 2012.

There is a lot more data in the Future of Open Source 2013 survey. Go check it out.

Today’s word is “open.” What does open mean in terms of open platforms and open standards? It’s a tricky concept. Is Windows more open than Mac OS X? Is Linux more open than Solaris? Is Android more open than iOS? Is the Java language more open than C#? Is Firefox more open than Chrome? Is SQL Server more open than DB2?

In 1996, according to Wikipedia,

Echosystem. What a marvelous typo! An email from an analyst firm referred several times to a particular software development ecosystem, but in one of the instances, the writer misspelled “ecosystem” as “echosystem.” As a technology writer and analyst myself, I found that the misspelling immediately set my mind racing. Echosystem. I love it.

It takes a lot to push the U.S. elections off the television screen, but Hurricane Sandy managed the trick. We would like to express our sympathies to those affected by the storm – too many lives were lost, homes and property destroyed, businesses closed.