“My name is Patricia from the Bank of America fraud prevention department. This important message is for Mr. Alan Zeichick. We are calling to verify some potentially suspicious activity on your account. It is very important that we speak with you.”


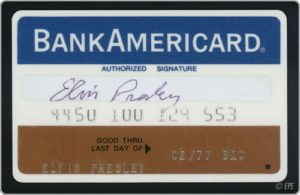

Tuesday’s voicemail from my bank was short and simple. Nobody had pilfered a credit-card receipt or hacked into my account, the representative told me during our conversation. Rather, BofA had been notified by Visa (the credit card clearinghouse) that a retailer had been hacked, and many credit card numbers were stolen. Including mine. My card was frozen immediately; the bank would issue me a new card with a new number.

Who was the merchant? According to the BofA representative, Visa didn’t divulge that information due to an ongoing investigation. Nor did the representative know how many credit card numbers were stolen; all she knew was that BofA had been given a list of its customers who were affected.

These stories are coming far too often. Millions of cards were stolen in 2014 from diverse merchants like P.F. Chang’s China Bistro (a restaurant chain), Michaels Stores (art supplies), Sally Beauty (cosmetics), and Shaw’s (grocery stores). And those are only a few of the major vendors. Who knows how many smaller card thefts are either never reported, or aren’t deemed sufficiently juicy by the news media?

Some of you might be thinking, “We don’t take credit card numbers on our websites, so there’s no potential risk exposure.” Wrong. I am frequently astonished by the number of companies that maintain lots of customer data, and have that data pilfered. The Payment Card Industry (PCI) standards say that you should never store customer payment information. We’ve all seen that those standards are not followed, sometimes intentionally, sometimes through neglect, and sometimes through flawed architecture, bad coding or lousy testing.
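For what it’s worth, here is a minimal Python sketch of what “not storing payment information” can look like in practice. It assumes a payment processor hands back an opaque token in exchange for the card number. The `StoredCard` record, the `mask_card_number` and `to_stored_card` helpers, and the locally generated token are all hypothetical stand-ins for illustration; they are not taken from the PCI standards or from any particular processor’s API.

```python
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class StoredCard:
    """What the merchant keeps on file: never the full card number."""
    token: str  # opaque reference (assumption: a real one comes from the processor)
    last4: str  # enough to show "card ending in 1111" on a receipt


def _digits(card_number: str) -> str:
    """Strip spaces and dashes, keeping only the digits."""
    digits = "".join(ch for ch in card_number if ch.isdigit())
    if len(digits) < 12:
        raise ValueError("card number is too short")
    return digits


def mask_card_number(card_number: str) -> str:
    """Return the number with everything but the last four digits hidden."""
    digits = _digits(card_number)
    return "*" * (len(digits) - 4) + digits[-4:]


def to_stored_card(card_number: str) -> StoredCard:
    """Build the record to persist; the full number is discarded here."""
    digits = _digits(card_number)
    # Placeholder token: in a real system the processor would issue this.
    return StoredCard(token=secrets.token_urlsafe(16), last4=digits[-4:])
```

If a record like this is stolen from a database or a laptop, the thief gets a token and four digits, not a usable card number.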

Let’s be clear: Encrypting browser communications does not protect your customers’ personal or financial information. If you are storing that information anywhere—in your data center, in the cloud—it is at real risk. The threats are active. Are your countermeasures active?

What is even more astonishing is that many of these thefts are of personal information stored on employees’ laptops. You may recall a high-profile case in 2013, where nearly 840,000 Horizon Blue Cross Blue Shield customers had their information compromised when two laptops were stolen from the New Jersey-based health insurance company.

To quote from the Star-Ledger’s story:

The stolen laptops were password-protected but had unencrypted data, Horizon said in a statement today. A subsequent investigation determined the computers may have contained files with personal information, including names, addresses, dates of birth, and, in some instances, Social Security numbers and limited clinical information, the insurer said.

How is that possible? No possible scenario should allow customer information to be downloaded onto a desktop or laptop or tablet or phone. Ever. Encrypted or not, the data should never leave the server.

Please tell me you aren’t storing credit card info in files that can be stolen. Please tell me your company has actively sought to ensure that customer information can never ever ever be downloaded from servers.

Data theft is a nuisance, for cardholders like me, and for businesses like yours. Do you protect your customers’ information?

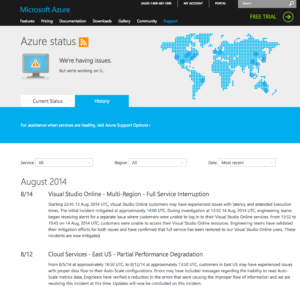

Cloud-based development tools are great. Until they don’t work.

We drove slightly more than 2,500 miles (4,000 kilometers), my wife and I, during a weeklong holiday. We explored different states in the western United States: Arizona (where we live), Colorado, New Mexico and Wyoming. The Rocky Mountains are incredible. Most of our vacation was at altitudes above 6,000 feet (1,800 meters). Many of the mountain peaks were above 14,000 feet (4,200 meters), and one road went above 11,000 feet (3,300 meters). Exciting!

Where do your employees go to find shared data? If it’s external data, probably an external search engine, like Google (

Microsoft has evolved considerably. It has moved on from its early days selling developer tools, its era focusing on Windows and Office, its run as a server software maker, and its first iteration as a cloud/online services company. Despite all the myriad changes, it’s always been true that Microsoft does not excel at innovation.

If your developers aren’t enrolled in developer relations programs, they will grow old and stale. They will become moldy. They will pine for the Good Old Days and opine endlessly about the irrelevance of new tools, new platforms, new paradigms and new ideas. No matter their brilliance today, they will become obsolescent.

GOOGLE I/O 2014, SAN FRANCISCO — What is Android? It’s hard to know these days, and I’m not sure if that’s good or not. We all know what happened when Microsoft began seeing Windows as a common operating system for everything from embedded systems to desktops to phones to servers. By trying to be reasonably good at everything, Windows lost its way and ceased being the best platform for anything.

Are you covered by a non-compete agreement at your current employer? Are your workers covered by a non-compete? While non-competes may make your executives (and their attorneys) feel good, they may not be good for your company.

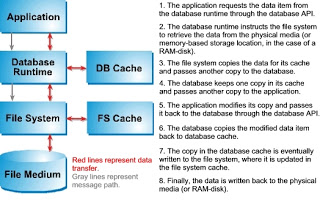

Two consulting projects this year have involved lots and lots of data. One was the migration of a very complex customer database and transaction logging system to a cloud-based CRM platform from a homegrown system. The other involved performing serious analytics on a non-profit’s membership system that had data spanning decades.

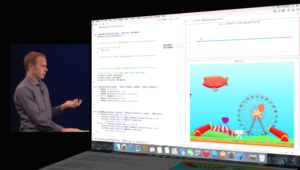

SAN FRANCISCO — I expected a new version of OS X, the operating system for Mac desktops and notebooks. I expected a new version of iOS, the operating system for iPhones and iPads. I did not expect a new programming language. Yet that’s what we got at Apple’s

There are lots of reasons to use

South San Francisco, California — Writing software would be oh, so much simpler if we didn’t have all those darned choices. HTML5 or native apps? Windows Server in the data center or Windows Azure in the cloud? Which Linux distro? Java or C#? Continuous Integration? Continuous Delivery? Git or Subversion or both? NoSQL? Which APIs? Node.js? Follow-the-sun?

a billion dollars? Software companies, both startups and established firms, are selling like hotcakes. Some are selling for millions of U.S. dollars. Some are selling for billions. While the bulk of the sales price often goes back to venture financiers, a sale can be sweet for equity-holding employees, and even for non-equity employees who get a bonus. Hurray for stock options!

“I tried working for some tech companies like Microsoft, Tektronix, IBM, and Intel. What a fiasco. I can’t count how many young men with way less experience and skills than me snagged the good fun hands-on tech jobs, while I got stuck doing some kind of crap customer service job. I still remember this guy who got hired as a desktop technician. He was in his 30s, but in bad health, always red and sweaty and breathing hard. It took him forever to do the simplest task, like connecting a monitor or printer. He didn’t know much and was usually wrong, but he kept his job. I busted my butt to show I was serious and already had a good skill set, and would work my tail off to excel, and they couldn’t see past that I wasn’t male. So I got the message, mentally told them to eff off and stuck with freelancing.”


I’ve had the opportunity to meet and listen to Steve Wozniak several times over the years. He’s always funny and engaging, and his scriptless riffs get better all the time. With this one, he had me rolling in the aisle.
