The word went out Wednesday, March 22, spreading from techie to techie. “Better change your iCloud password, and change it fast.” What’s going on? According to ZDNet, “Hackers are demanding Apple pay a ransom in bitcoin or they’ll blow the lid off millions of iCloud account credentials.”


A hacker group claims to have access to 250 million iCloud and other Apple accounts. They are threatening to reset all the passwords on those accounts, and then remotely wipe the associated phones using lost-phone capabilities, unless Apple pays up with untraceable bitcoins or Apple gift cards. The ransom is a laughably small $75,000.

What’s Happening at Apple?

According to various sources, at least some of the stolen account credentials appear to be legitimate. Whether that means all 250 million accounts are in peril, of course, is unknowable.

Apple seems to have acknowledged that there is a genuine problem. The company told CNET, “The alleged list of email addresses and passwords appears to have been obtained from previously compromised third-party services.” We obviously don’t know what Apple is going to do, or what Apple can do. It hasn’t put out a general call, at least as of Thursday, for users to change their passwords, which would seem to be prudent. It also hasn’t encouraged users to enable two-factor authentication, which should make it much more difficult for hackers to reset iCloud passwords without physical access to a user’s iPhone, iPad, or Mac.

Unless the hackers alter the demands, Apple has a two-week window to respond. From its end, it could temporarily disable password reset capabilities for iCloud accounts, or at least make the process difficult to automate, access programmatically, or even access more than once from a given IP address. So, it’s not “game over” for iCloud users and iPhone owners by any means.
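To make that last idea concrete, here is a minimal sketch of a per-IP limiter on password-reset requests. It is an illustration only, not a description of anything Apple actually runs: the class name `ResetRateLimiter`, its parameters, and the one-request-per-hour default are all my own assumptions.

```python
import time
from collections import defaultdict, deque


class ResetRateLimiter:
    """Hypothetical limiter: at most `max_attempts` reset requests per IP per window."""

    def __init__(self, max_attempts=1, window_seconds=3600.0, clock=time.monotonic):
        self.max_attempts = max_attempts
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self._attempts = defaultdict(deque)  # ip -> timestamps of allowed requests

    def allow(self, ip):
        """Return True if this IP may start a password reset right now."""
        now = self.clock()
        recent = self._attempts[ip]
        # Forget requests that have aged out of the sliding window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_attempts:
            return False
        recent.append(now)
        return True


if __name__ == "__main__":
    limiter = ResetRateLimiter(max_attempts=1, window_seconds=3600.0)
    for ip in ("203.0.113.5", "203.0.113.5", "198.51.100.7"):
        print(ip, "allowed" if limiter.allow(ip) else "blocked")
```

An attacker with a botnet can spread requests across many addresses, so a limiter like this raises the cost of automating resets rather than eliminating the threat. It would be one layer among several.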

It could be that the hackers are asking for such a low ransom because they know their attack is unlikely to succeed. They’re possibly hoping that Apple will figure it’s easier to pay a small amount than to take any real action. My guess is they are wrong, and Apple will lock them out before the April 7 deadline.

Where Did This Come From?

Too many criminal networks have access to too much data. Where are they getting it? Everywhere. The problem multiplies because people reuse usernames and passwords. For nearly every site nowadays, the username is the email address. That means if you know my email address (and it’s not hard to find), you know my username for Facebook, for iCloud, for Dropbox, for Salesforce.com, for Windows Live, for Yelp. Using the email address for the login is superficially good for consumers: They are unlikely to forget their login.

The bad news is that account access now depends on a single piece of hidden information: the password. And people reuse passwords and choose weak passwords. So if someone steals a database from a major retailer with a million account usernames (which are email addresses) and passwords, many of those will also be Facebook logins. And Twitter. And iCloud.

That’s how hackers can quietly accumulate what they claim are 250 million iCloud passwords. They probably have 250 million email address / password pairs amalgamated from various sources: A million from this retailer, ten million from that social network. It adds up. How many of those will work in iTunes? Unknown. Not 250 million. But maybe 10 million? Or 20 million? Either way, it’s a nightmare for customers and a disaster for Apple, if those accounts are locked, or if phones are bricked.
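The same arithmetic can be run from the defender's side. The sketch below, under assumptions of my own, merges several leaked lists into one set of unique email/password pairs and then checks which of them would actually unlock an account on a given service, so those users can be forced to reset. The function names, the `user_store` layout (email mapped to a salt and a PBKDF2 hash), and the sample data are all hypothetical; a real service would use its own storage and hashing scheme.

```python
import hashlib
import hmac
import os


def hash_password(password, salt, iterations=100_000):
    """Salted PBKDF2-HMAC-SHA256 hash (assumed scheme for this example)."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)


def merge_dumps(*dumps):
    """Combine leaked lists into one set of unique (email, password) pairs."""
    merged = set()
    for dump in dumps:
        for email, password in dump:
            # Email addresses are treated as case-insensitive usernames.
            merged.add((email.strip().lower(), password))
    return merged


def accounts_at_risk(leaked_pairs, user_store):
    """Return the emails whose leaked password matches the hash on file."""
    at_risk = set()
    for email, password in leaked_pairs:
        record = user_store.get(email)
        if record is None:
            continue  # the leaked address has no account on this service
        salt, stored_hash = record
        if hmac.compare_digest(hash_password(password, salt), stored_hash):
            at_risk.add(email)
    return at_risk


if __name__ == "__main__":
    # Made-up sample data: Alice reused her password, Bob did not.
    salt_a, salt_b = os.urandom(16), os.urandom(16)
    store = {
        "alice@example.com": (salt_a, hash_password("hunter2", salt_a)),
        "bob@example.com": (salt_b, hash_password("unique-passphrase", salt_b)),
    }
    retailer = [("Alice@Example.com", "hunter2"), ("carol@example.com", "abc123")]
    social = [("alice@example.com", "hunter2"), ("bob@example.com", "password1")]
    print(sorted(accounts_at_risk(merge_dumps(retailer, social), store)))
```

The gap between the merged total and the at-risk total is the gap between the 250 million the hackers claim and the number of accounts they can actually open.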

What’s the Answer?

As long as we use passwords, and users have the ability to reuse passwords, this problem will exist. Hackers are excellent at stealing data. Companies are bad at detecting breaches, and even worse at disclosing them unless legally obligated to do so.

Can Apple prevent those 250 million accounts from being seized? Probably. Will problems like this happen again and again and again? For sure, until we move away from any possibility of shared credentials. And that’s not happening any time soon.

We received this realistic-looking email today claiming to be from a payment company called FrontStream. If you click the links, it tries to get you to activate an account and provide bank details. However… We never requested an account from this company. Therefore, we label it phishing, and an attempt to defraud.

Let’s talk about the practical application of artificial intelligence to cybersecurity. Or rather, let’s read about it. My friend

Was the Russian government behind the 2014 theft of data on about 500 million Yahoo subscribers?

To absolutely nobody’s surprise, the U.S. Central Intelligence Agency can spy on mobile phones. That includes Android phones and iPhones, and the agency can also monitor the microphones on smart home devices like televisions.

Cybercriminals want your credentials and your employees’ credentials. When those hackers succeed in stealing that information, it can be bad for individuals – and even worse for corporations and other organizations. This scourge is bad, and it will remain bad.

Nothing you share on the Internet is guaranteed to be private to you and your intended recipient(s). Not on Twitter, not on Facebook, not on Google+, not using Slack or HipChat or WhatsApp, not in closed social-media groups, not via password-protected blogs, not via text message, not via email.

I can’t trust the Internet of Things. Neither can you. There are too many players and too many suppliers of the technology that can introduce vulnerabilities in our homes, our networks – or elsewhere. It’s dangerous, my friends. Quite dangerous. In fact, it can be thought of as a sort of Fifth Column, but not in the way many of us expected.

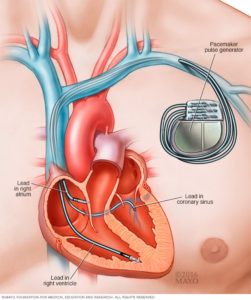

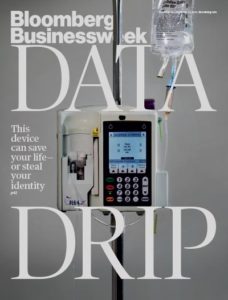

Modern medical devices increasingly leverage microprocessors and embedded software, as well as sophisticated communications connections, for life-saving functionality. Insulin pumps, for example, rely on a battery, pump mechanism, microprocessor, sensors, and embedded software. Pacemakers and cardiac monitors also contain batteries, sensors, and software. Many devices also have WiFi- or Bluetooth-based communications capabilities. Even hospital rooms with intravenous drug delivery systems are controlled by embedded microprocessors and software, which are frequently connected to the institution’s network. But these innovations also mean that a software defect can cause a critical failure or security vulnerability.

Think about alarm systems in cars. By default, many automobiles didn’t come with an alarm system installed from the factory. That was for three main reasons: It lowered the base sticker price on the car; created a lucrative up-sell opportunity; and allowed for variations on alarms to suit local regulations.

Cloud-based firewalls come in two delicious flavors: vanilla and strawberry. Both flavors are software that checks incoming and outgoing packets to filter against access policies and block malicious traffic. Yet they are also quite different. Think of them as two essential network security tools: Both are designed to protect you, your network, and your real and virtual assets, but in different contexts.

What’s the biggest tool in the security industry’s toolkit? The patent application. Security thrives on innovation, and always has, because throughout recorded history, the bad guys have always had the good guys at a disadvantage. The only way to respond is to fight back smarter.

Everyone has received those crude emails claiming to be from your bank’s “Secuirty Team” telling you that you need to click a link to “reset you account password.” It’s pretty easy to spot those emails, with all the misspellings, the terrible formatting, and the bizarre “reply to” email addresses at domains halfway around the world. Other emails of that sort ask you to review an unclothed photo of an A-list celebrity, or open up an attached document that tells you what you’ve won.

What’s on the industry’s mind? Security and mobility are front and center in the cerebral cortex, as two of the year’s most important events prepare to kick off.

The best way to have a butt-kicking cloud-native application is to write one from scratch. Leverage the languages, APIs, and architecture of the chosen cloud platform before exploiting its databases, analytics engines, and storage. As I wrote for Ars Technica, this will allow you to take advantage of the wealth of resources offered by companies like Microsoft, with its Azure PaaS (Platform-as-a-Service) offering, or Google, with Google Cloud Platform’s App Engine PaaS.

Las Vegas, January 2017 – “

According to a recent study, 46% of the top one million websites are considered risky. Why? Because the homepage or background ad sites are running software with known vulnerabilities, the site is categorized as known bad for phishing or malware, or the site had a security incident in the past year.

“If you give your security team the work they hate to do day in and day out, you won’t be able to retain that team.”

Once upon a time, goes the story, there was honor between thieves and victims. They held a member of your family for ransom; you paid the ransom; they left you alone. The local mob boss demanded protection money; if you didn’t pay, your business burned down, but if you did pay and didn’t rat him out to the police, he and his gang honestly tried to protect you. And hackers may have been operating outside legal boundaries, but for the most part, they were explorers and do-gooders intending to shine a bright light on the darkness of the Internet – not vandals, miscreants, hooligans and ne’er-do-wells.

From company-issued tablets to BYOD (bring your own device) smartphones, employees are making the case that mobile devices are essential for productivity, job satisfaction, and competitive advantage. Except in the most regulated industries, phones and tablets are part of the landscape, but their presence requires a strong security focus, especially in the era of non-stop malware, high-profile hacks, and new vulnerabilities found in popular mobile platforms. Here are four specific ways of examining this challenge that can help drive the choice of both policies and technologies for reducing mobile risk.

When an employee account is compromised by malware, the malware establishes a foothold on the user’s computer – and immediately tries to gain access to additional resources. It turns out that with the right data gathering tools, and with the right Big Data analytics and machine-learning methodologies, the anomalous network traffic caused by this activity can be detected – and thwarted.

Medical devices are incredibly vulnerable to hacking attacks. In some cases it’s because of software defects that allow for exploits, like buffer overflows, SQL injection or insecure direct object references. In other cases, you can blame misconfigurations, lack of encryption (or weak encryption), non-secure data/control networks, unfettered wireless access, and worse.

Be paranoid! When you visit a website for the first time, it can learn a lot about you. If you have cookies on your computer from one of the site’s partners, it can see what else you have been doing. And it can place cookies onto your computer so it can track your future activities.

Nothing is scarier than getting together with a buyer (or a seller) to exchange dollars for a product advertised on Craigslist, eBay or another online service… and then being mugged or robbed. There are certainly plenty of news stories on this subject, but the danger continues. Here are some recent reports:

Can someone steal the data off your old computer? The short answer is yes. A determined criminal can grab the bits, including documents, images, spreadsheets, and even passwords.

Here’s a popular article that I wrote on email security for Sophos’ “Naked Security” blog.

When it comes to cars, safety means more than strong brakes, good tires, a safety cage, and lots of airbags. It also means software that won’t betray you; software that doesn’t pose a risk to life and property; software that’s working for you, not for a hacker.

News websites are an irresistible target for hackers because they are so popular. Why? Because they are trusted brands, and because, by their very nature, they contain many external links and use lots of outside content providers and analytics/tracking services. It doesn’t take much to corrupt one of those websites, or one of the myriad partner sites they rely upon, like ad networks, content feeds or behavioral trackers.

Thank you, NetGear, for taking care of your valued customers. On July 1, the company announced that it would be shutting down the proprietary back-end cloud services required for its VueZone cameras to work – turning them into expensive camera-shaped paperweights. See “
