Summary of Recall Trends. Source: SRR.

The costs of an automobile recall can be immense for an OEM automobile or light truck manufacturer – and potentially ruinous for a member of the industry’s supply chain. Think about the ongoing Takata airbag scandal, which Bloomberg says could cost US$24 billion. General Motors’ ignition switch recall may have reached $4.1 billion. In 2001, the exploding Firestone tires on the Ford Explorer cost $3 billion to recall. The list goes on and on. Those are all hardware problems. What about bits and bytes?

Until now, it’s been difficult to quantify the impact of software defects on the automotive industry. Thanks to a new analysis from SRR called “Industry Insights for the Road Ahead: Automotive Warranty and Recall Report 2016,” we have a good handle on this elusive area.

According to the report, there were 63 software-related vehicle recalls from late 2012 to June 2015. That’s based on data from the United States’ National Highway Traffic Safety Administration (NHTSA). The SRR report derived that count of 63 software-related recalls using this methodology (p. 22):

To classify a recall as a software component recall, SRR searched the “Defect Summary” and “Corrective Action” fields of NHTSA’s Recall flat file for the term “software.” SRR’s inquiry captured descriptions of software-related defects identified specifically as such, as well as defects that were to be fixed by updating or changing a vehicle’s software.
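The keyword search described in that methodology can be sketched in a few lines of Python. This is only an illustration of the idea, not SRR's actual tooling: the field names `campaign_id`, `defect_summary` and `corrective_action` are hypothetical stand-ins for the columns of NHTSA's recall flat file, and the sample rows are invented.

```python
from typing import Iterable, Mapping, Set

# Hypothetical field names; the real NHTSA flat file has its own layout.
SEARCH_FIELDS = ("defect_summary", "corrective_action")


def is_software_related(record: Mapping[str, str], term: str = "software") -> bool:
    """True if `term` appears, ignoring case, in either searched field."""
    needle = term.lower()
    return any(needle in record.get(field, "").lower() for field in SEARCH_FIELDS)


def software_campaigns(records: Iterable[Mapping[str, str]]) -> Set[str]:
    """Unique campaign IDs of the records flagged as software-related."""
    return {r["campaign_id"] for r in records if is_software_related(r)}


# Made-up sample rows for illustration only; not real NHTSA data.
SAMPLE = [
    {"campaign_id": "A1",
     "defect_summary": "Software error in the brake control module.",
     "corrective_action": "Dealers will replace the module."},
    {"campaign_id": "B2",
     "defect_summary": "Door latch may not engage.",
     "corrective_action": "Dealers will update the latch control SOFTWARE."},
    {"campaign_id": "C3",
     "defect_summary": "Fuel hose may crack.",
     "corrective_action": "Dealers will replace the hose."},
    # Same campaign listed again, as happens when one recall covers several models.
    {"campaign_id": "A1",
     "defect_summary": "Software error in the brake control module.",
     "corrective_action": "Dealers will replace the module."},
]

if __name__ == "__main__":
    print(sorted(software_campaigns(SAMPLE)))
```

Collecting campaign IDs into a set counts each recall once, however many rows mention it. A plain substring match is blunt, though: it would also flag a recall whose text merely says that no software change is needed, so counts produced this way are best read as an approximation.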

That led to this analysis (p. 22):

Since the end of 2012, there has been a marked increase in recall activity due to software issues. For the primary light vehicle makes and models we studied, 32 unique software-related recalls affected about 3.6 million vehicles from 2005–2012. However, in a much shorter time period from the end of 2012 to June 2015, there were 63 software-related recalls affecting 6.4 million more vehicles.

And continuing (p. 23):

From less than 5 percent of all recalls in 2011, software-related recalls have risen to almost 15 percent in 2015. Overall, the amount of unique campaigns involving software has climbed dramatically, with nine times as many in 2015 than in 2011…

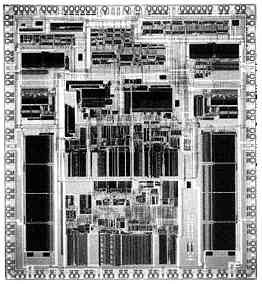
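To put the two periods quoted above on the same footing, it helps to convert them to yearly rates. The sketch below uses only the counts quoted from the report; the period lengths (8 years for 2005–2012, and roughly 2.5 years for the end of 2012 to June 2015) are my own assumptions, since the report excerpt does not state them.

```python
# Figures quoted from the SRR report (p. 22).
EARLY_RECALLS, EARLY_VEHICLES_M = 32, 3.6  # 2005-2012, vehicles in millions
LATE_RECALLS, LATE_VEHICLES_M = 63, 6.4    # end of 2012 to June 2015

# Assumed period lengths in years (not stated in the report excerpt).
EARLY_YEARS = 8.0
LATE_YEARS = 2.5


def per_year(total: float, years: float) -> float:
    """Average yearly rate over a period."""
    return total / years


early_rate = per_year(EARLY_RECALLS, EARLY_YEARS)
late_rate = per_year(LATE_RECALLS, LATE_YEARS)
early_vehicles = per_year(EARLY_VEHICLES_M, EARLY_YEARS)
late_vehicles = per_year(LATE_VEHICLES_M, LATE_YEARS)

if __name__ == "__main__":
    print(f"Recalls per year: {early_rate:.1f} -> {late_rate:.1f}")
    print(f"Vehicles per year (millions): {early_vehicles:.2f} -> {late_vehicles:.2f}")
    print(f"Recall rate multiple: {late_rate / early_rate:.1f}x")
```

Under those assumptions, software-related recalls went from about 4 per year to about 25 per year, roughly a sixfold increase. Different choices for the period boundaries would shift the multiple somewhat, but not the direction.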

No surprises there given the dramatically increased complexity of today’s connected vehicles, with sophisticated internal networks, dozens of ECUs (electronic control units with microprocessors, memory, software and network connections), and extensive remote connectivity.

These software defects are not occurring only in systems where one expects to find sophisticated microprocessors and software, such as engine management controls and Internet-connected entertainment platforms. Microprocessors are being used to analyze everything from the driver’s position and state of alertness, to road hazards, to lane changes, and to offer advanced features such as automatic parallel parking.

Where in the car are the software-related vehicle recalls? Since 2006, says the report, recalls have been prompted by defects in areas as diverse as locks/latches, power train, fuel system, vehicle speed control, air bags, electrical systems, engine and engine cooling, exterior lighting, steering, hybrid propulsion – and even the parking brake system.

That’s not all, because not every software defect results in a public and costly recall. A recall is the last resort, from the OEM’s perspective. Whenever possible, the defects are either ignored by the vehicle manufacturer, or quietly addressed by a software update the next time the car visits a dealer. (If the car doesn’t visit an official dealer for service, the owner may never know that a software update is available.) Says the report (p. 25):

In addition, SRR noted an increase in software-related Technical Service Bulletins (TSB), which identify issues with specific components, yet stop short of a recall. TSBs are issued when manufacturers provide recommended procedures to dealerships’ service departments for fixing problematic components.

A major role of the NHTSA is to record and analyze vehicle failures, and to attempt to determine their cause. Not all failures result in a recall, or even in a TSB. However, they are tracked by the agency via Early Warning Reporting (EWR). Explains the report (p. 26):

In 2015, three new software-related categories reported data for the first time:

• Automatic Braking, listed on 21 EWR reports, resulting in 26 injuries and 1 fatality

• Electronic Stability, listed on 6 EWR reports, resulting in 7 injuries and 1 fatality

• Forward Collision Avoidance, listed in 1 EWR report, resulting in 1 injury and no fatalities

The bottom line here, beyond protecting life and property, is the bottom line for the automobile industry and its supply chain. As the report says in its conclusion (p. 33):

Suppliers that help OEMs get the newest software-aided components to market should be prepared for the increased financial exposure they could face if these parts fail.

About the Report

“Industry Insights for the Road Ahead: Automotive Warranty and Recall Report 2016” was published by SRR (Stout Risius Ross), which offers global financial advisory services. SRR has been in the automotive industry for 25 years, and says, “SRR professionals have more automotive experience in these service areas than any other advisory firm, period.”

This brilliant report — which is free to download in its entirety — was written by Neil Steinkamp, a Managing Director at SRR. He has extensive experience in providing a broad range of business and financial advice to corporate executives, risk managers, in-house counsel and trial lawyers. Mr. Steinkamp has provided consulting services and has been engaged as an expert in numerous matters involving automotive warranty and recall costs. His practice also includes consulting services for automotive OEMs, suppliers and their advisors regarding valuation, transactions and disputes.
